There’s an old joke among people in the display industry about “Lies, damn lies, statistics … and ANSI lumens”. Sometimes it seems that it doesn’t matter what you do, specifications get bent and stretched by those that are trying to exploit them. Does that mean that you should stop trying to improve them? I don’t think so.

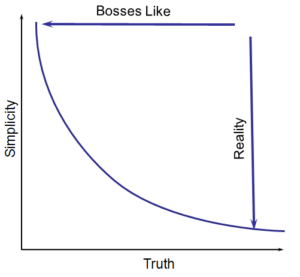

Many years ago, I heard a talk by (if I remember correctly) Peter Cochrane, a ‘futurologist’ who was at that time (again, iirc) CTO of BT in the UK. He showed a diagram that I have used in talks many times since. The real world is often complex and messy – and hard to put into the simple terms that bosses and customers would like to see. Buyers and bosses have to make decisions, so they like clear and simple situations.

The reality is usually much more messy and nuanced and a single simple number or answer is rarely enough to really describe a situation.

The same is broadly true of specifications. The simple answer may not give you the messy truth. As we have discussed before in these columns, in some detail, ANSI lumens really don’t tell you much at all about how good a projector will look when it’s showing a real image, especially an image of the real world in a video or photograph. The ANSI specification doesn’t help you understand the issue of colour quality, which is so crucial, and that is why I am persuaded that CLO (Colour Light Output) or measuring output in sRGB are much better ways of specifying projector output. (Is There a New Number for Projector Performance?)

Resolution is Also Tricky

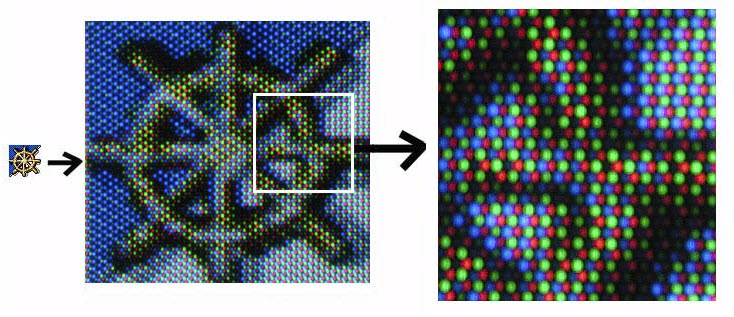

Some of the same issues apply when it comes to resolution. Twenty-five years or so ago, I was very involved with high-end CRT monitors, and it was always a real problem to find a specification that could genuinely express their resolution and performance. Resolution was extremely difficult to define because, although those who were not close to the technology thought it was all about the dot pitch of the CRT, the actual resolution performance also depended on other factors. These included the shape and size of the CRT beam, the alignment of the three CRT beams and the ability of the amplifiers in the monitor to accurately control the intensity of the beams. In an extreme case, a very fine CRT beam could actually address the left- and right-hand sides of each phosphor dot independently, so there was no coupling at all between the different components that defined the resolution.

CRT close up Image : http://www.infocellar.com/

This meant that makers (including my competitors at the time) would claim much higher resolutions than you would want to use, or their monitors would see performance drop off very rapidly as resolutions increased. It was very hard to explain how good our products were.

At the time, and after a lot of thought, I came to the conclusion that the only way to really specify the resolution was to quote the resolution and also the contrast between adjacent pixels at that resolution (which I called detail contrast). The idea was that monitor buyers would then be able to decide what level of detail contrast was needed for a particular application and set their ‘cut off’ for tenders and RFQs at that level. That would have really helped me to communicate the superior quality of my products to customers without having to get into side by side demonstrations (which we invariably won, in terms of image quality, but which took a lot of time).

Unfortunately, in those days, I was at the “sharp end” in sales and marketing and really didn’t understand the way that the display metrology community and supply chain worked, so I wasn’t able to change things at all.

ICDM To the Rescue

The question of “what is the resolution” has continued to be an issue, especially since the introduction of RGBW and other non-RGB pixel structures, used to reduce power consumption and help with the introduction of OLED displays. Candice Brown Elliott (who developed a number of the different pixel structures) wrote a couple of articles last year on this topic (The Display Resolution War – How much resolution is enough? and Resolved! No More Dot Counting!), and Florian Friedrich wrote an interesting Display Daily a few months ago about some of the issues on some TVs that are being sold as “UltraHD”. His tests showed that there are some disturbing artefacts on these panels under certain conditions. (Pseudo UHD: Watch Out for Ultra HD TVs with Reduced Resolution!)

The controversy on “what is resolution” may have now been resolved (pun intended) by a decision by the ICDM in May to adopt a modified method for defining resolution in the current IDMS standard for display measurement, as the previous version had been created with only RGB pixels in mind.

The key point about the new definition is that it states that the contrast has to be specified at the claimed resolution. The measure used is Michelson contrast, which helps to avoid issues with displays where pixels can be completely off, such as OLEDs, and which would otherwise always show infinite contrast because you are, effectively, dividing by zero. “Pass and fail” limits (25% for video and 50% for computer monitors) are still available, but the contrast also has to be shown. That means, effectively, two numbers – the resolution and the contrast at that resolution.
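To make the “two numbers” idea concrete, here is a minimal sketch of the arithmetic only. It assumes you already have the luminance of the light and dark lines of a test pattern at the claimed resolution; how those values are measured is defined by the IDMS and is not reproduced here. The function and variable names are my own, purely for illustration, and the limits are the 25% and 50% figures quoted above.

```python
# Illustrative sketch only: names are hypothetical, and the measurement
# procedure that produces l_max and l_min is not shown here.

# Pass/fail limits as quoted in the text (fraction of full contrast).
LIMITS = {"video": 0.25, "computer": 0.50}


def michelson_contrast(l_max: float, l_min: float) -> float:
    """Return (Lmax - Lmin) / (Lmax + Lmin), a value between 0 and 1."""
    if l_min < 0 or l_max < l_min:
        raise ValueError("expected 0 <= l_min <= l_max")
    total = l_max + l_min
    if total == 0:
        return 0.0  # both lines black: no modulation at all
    return (l_max - l_min) / total


def resolution_claim(h: int, v: int, l_max: float, l_min: float,
                     use: str = "video") -> dict:
    """Bundle the claimed resolution with the contrast measured at it."""
    contrast = michelson_contrast(l_max, l_min)
    return {
        "resolution": f"{h}x{v}",
        "contrast": contrast,
        "passes": contrast >= LIMITS[use],
    }


if __name__ == "__main__":
    # An OLED-like case: the dark line is fully off, yet the result is finite.
    print(michelson_contrast(100.0, 0.0))
    # A weakly modulated panel checked against the computer monitor limit.
    print(resolution_claim(3840, 2160, 60.0, 40.0, use="computer"))
```

Note that with the dark line at zero the simple ratio Lmax/Lmin would blow up, whereas the Michelson figure simply reaches its maximum of 1, which is why it suits displays whose pixels can switch completely off.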

The current version will stay in place until a new version of the IDMS is produced. The next full version will further develop the testing to get beyond simple “pass/fail” tests and to include colour. Insight Media has published a white paper, written by Michael Becker at Display Metrology & Systems (DM&S) in Germany, which explains these changes and can be downloaded here (registration required).

Of course, just because the measurement procedure exists, it doesn’t mean it will be used. And even if it’s used, there’s even less certainty that the marketing departments of those disadvantaged by the new process will quote the data accurately!

Bob