Before a standing-room-only crowd of 500+ Display Week attendees, Intel’s display guru Achin Bhowmik stunned an audience of mostly mechanical and electrical engineers, product managers and physicists by asking what significant event took place roughly 540 million years ago. He hinted that it was not only relevant to everyone in the room that day, but central to the evolution of the display industry going forward.

Why of course, the Cambrian explosion of the early Paleozoic era. Not a literal explosion but a rapid evolutionary expansion of life on Earth that included the first signs of light sensitivity in living organisms. It was in this geologic eon of time that the very beginnings of what culminated in Intel Short Course on Human visual system, note color sensors (cones) in human eye are in very narrow area with none in the periphery, Photo: Sechrist the complex human visual system millions of years (and genetic iterations) later. Now this is stuff the select group of engineers et al. at Display Week least expected, and are rarely exposed to. This, compounded by jet-lag from a recent 12 hour flight from Asia, plus a hearty post session lunch break, helped create a highly disrupted circadian rhythm for some session attendees. Suffice it to say, many in the group were begging for a nap, and a life / bio science lecture was just the thing to give it to them.

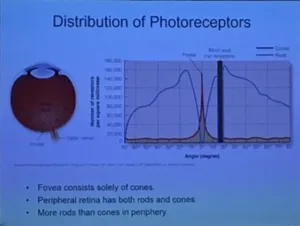

But Bhowmik pressed on, saying that display requirements derived from the distribution of photoreceptors in the human eye (rods and cones, remember?) will help us reduce processing power by orders of magnitude, as we begin rendering full-detail images for just that part of the display the eye is looking at: foveated rendering.
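The idea of spending rendering effort only where the eye is looking can be sketched in a few lines of Python. This is a minimal illustration, not anything shown in the talk: the eccentricity tiers, the tile size and every function name are hypothetical choices made for the example, and the angle calculation uses a simple pixels-divided-by-PPD approximation.

```python
import math

# Hypothetical tiers: (max angle from gaze in degrees, per-axis resolution scale).
# These thresholds are assumptions for illustration, not figures from the talk.
TIERS = [(5.0, 1.0), (20.0, 0.5), (math.inf, 0.25)]


def eccentricity_deg(point, gaze, ppd):
    """Approximate angle between a screen point and the gaze point.

    Treats the display as having a uniform pixels-per-degree (ppd) value,
    which is a small-angle simplification.
    """
    return math.hypot(point[0] - gaze[0], point[1] - gaze[1]) / ppd


def resolution_scale(ecc_deg):
    """Pick the per-axis resolution scale for a given eccentricity."""
    for max_ecc, scale in TIERS:
        if ecc_deg <= max_ecc:
            return scale
    return TIERS[-1][1]


def shading_cost_fraction(width, height, gaze, ppd, tile=32):
    """Estimate shading work relative to rendering every tile at full detail."""
    cost = 0.0
    tiles = 0
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            center = (tx + tile / 2, ty + tile / 2)
            scale = resolution_scale(eccentricity_deg(center, gaze, ppd))
            cost += scale * scale  # scale applies on both axes
            tiles += 1
    return cost / tiles


if __name__ == "__main__":
    fraction = shading_cost_fraction(2048, 2048, gaze=(1024, 1024), ppd=20)
    print(f"Relative shading cost: {fraction:.3f}")
```

With these made-up tiers, only the small region around the gaze point is shaded at full detail, so the relative cost falls well below 1. The actual savings depend entirely on the tier choices and on how accurately and quickly the gaze is tracked.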

Bhowmik explained that the human fovea consists of only cones (color receptors), while rods and cones together make up the periphery, with far more (orders of magnitude more) rods than cones in that space. Resolution and field of view were also discussed, with the assertion that we should be talking about pixels per degree (PPD) rather than pixels per inch (PPI) specifications in HMD applications. He said the human eye has an angular resolution of 1/60 of a degree, with each eye’s horizontal field of view (FOV) at 160° and vertical FOV at 175°.
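To see why these numbers matter, it helps to work the arithmetic. The sketch below takes the figures quoted above (1/60 of a degree, 160° by 175°) and asks how many pixels a display would need to match the eye everywhere at once. It assumes a uniform PPD across the whole field of view, which is a simplification. The function names are invented for this example, and the PPI-to-PPD conversion applies only to a flat panel viewed directly, without HMD optics.

```python
import math

EYE_PPD = 60  # 1/60 of a degree per pixel, the angular resolution quoted in the talk


def pixels_to_match_eye(fov_deg, ppd=EYE_PPD):
    """Pixels needed along one axis to cover fov_deg at ppd pixels per degree."""
    return round(fov_deg * ppd)


def ppd_from_ppi(ppi, viewing_distance_in):
    """Pixels per degree for a flat panel viewed head-on at a distance in inches.

    Assumes direct viewing with no lenses, so it does not model HMD optics.
    """
    # One degree of visual angle spans 2 * d * tan(0.5 deg) inches at distance d.
    inches_per_degree = 2 * viewing_distance_in * math.tan(math.radians(0.5))
    return ppi * inches_per_degree


if __name__ == "__main__":
    horizontal = pixels_to_match_eye(160)
    vertical = pixels_to_match_eye(175)
    print(f"{horizontal} x {vertical} = {horizontal * vertical / 1e6:.1f} megapixels per eye")
    print(f"300 PPI panel at 12 in: {ppd_from_ppi(300, 12):.1f} PPD")
```

Under these assumptions the requirement comes to 9,600 by 10,500 pixels, or about 100 megapixels per eye. That is the brute-force budget that gaze-directed rendering aims to avoid. The second function shows why PPI alone says little: the same panel yields a very different PPD depending on how far it sits from the eye.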

As early as 2014, one such real-world example came from the Fove headset from Japan, which, according to Gizmag, “actually adjusts [the] focus of a scene by determining where in the 3D space the user is looking through tracking their gaze and parallax. This is where the Fove derives its name from, referring to both field of view (FOV) and the fovea centralis (generally known as the fovea), which is area of the retina responsible for sharp central vision.”

Meanwhile, back at Display Week, DigiLens, the small-booth Best in Show winner founded by Jonathan Waldern, showed its version of the future: display-rendered images that target just where the fovea is directed, using a two-way display that includes gaze tracking. According to Waldern, this fovea-rendered display system drastically reduces display power and latency compared with other technologies to date, a significant benefit in AR and VR applications. So much so, he said, that the company has attracted key investment funds from Rockwell Collins. When a person’s gaze is constantly tracked by a non-intrusive AR or VR system, that data can be fed back to the system at very low latency, with some remarkable and intuitive display interface results. Indeed, these are early days; think Steve Jobs in the Xerox PARC lab seeing the mouse interface for the first time.

Waldern said his technology “…allows development of holographic system with an 8x higher index.” The coming world of AR and VR (augmented and virtual reality) stands to gain a major boost from a low-latency visual system that both delivers the image and knows exactly where the user is looking. The display not only shows specific content, but also offers the possibility of discerning user intent by tracking where the user is looking. The system can both augment and respond in a natural, hands-free way. And just as the mouse empowered a whole new graphical user interface decades ago, reliable gaze and eye tracking technology has the potential to change everything yet again.

So, as with all fundamental events of consequence, which often come at the expense of extreme sacrifice, those who endured the hardships of fatigue (and overeating) and stayed with Bhowmik’s deep dive into biology and human physiology were rewarded with no less than a glimpse of the very future of the display industry. That future is centered not on expanding resolution, speeds and feeds, but on the simplicity of targeting the complex human visual system, in all its evolutionary glory.

– Steve Sechrist