Our friends at Jon Peddie Research have released a white paper that summarises GPU developments in 2016 and argues that the GPU is one of the best and most significant developments in our lifetime.


JPR said there are just nine suppliers of GPUs and GPU designs. Qualcomm, Intel, Nvidia, and AMD design and manufacture GPUs, while ARM, Imagination, VeriSilicon, DMP, and ThinkSilicon offer GPU designs for license.

Since its introduction in the late 1990s, the GPU has evolved from a simple geometry processor into an elaborate sea of 32-bit floating-point processors running at gigahertz clock speeds. The software that supports and exploits the GPU (programming tools, APIs, drivers, applications, and operating systems) has also evolved in function, efficiency, and, unavoidably, complexity. GPU manufacturing approaches science fiction, with feature sizes dropping below 10nm next year. The industry is on a glide path to 3nm, and some think even 1nm. Moore’s law is far from dead, but it is getting trickier to coax out of the genie’s bottle as we push into near-atomic scales that can only be modeled, not seen.

The notion of smaller platforms (e.g., mobile devices) or integrated graphics (e.g., a CPU with an on-chip GPU) “catching up” to desktop PC GPUs is “absurd”, said JPR. Moore’s law works for all silicon devices, so discrete GPUs keep advancing too. Intel’s best integrated GPU today delivers 1152 GFLOPS, roughly what a 2010 desktop discrete GPU offered (about 1300 GFLOPS).
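Peak GFLOPS figures like these are theoretical numbers: the count of floating-point units, times the operations each completes per clock, times the clock rate. The short Python sketch below works through that arithmetic. The breakdown of Intel’s 1152 GFLOPS into 72 execution units × 16 FP32 FLOPs per clock × 1.0 GHz is an assumption chosen because it reproduces the quoted total; the article itself gives only the total, and the helper name `peak_gflops` is hypothetical.

```python
# Theoretical peak FP32 throughput:
#   units x FLOPs per unit per clock x clock (GHz) = GFLOPS
# ASSUMPTION: 72 execution units, each issuing 16 FP32 FLOPs per clock
# (two 4-wide fused multiply-add units, 2 FLOPs per FMA), at 1.0 GHz.
# Only the 1152 GFLOPS total comes from the article.


def peak_gflops(units: int, flops_per_unit_per_clock: int, clock_ghz: float) -> float:
    """Hypothetical helper: peak throughput in GFLOPS, ignoring memory and occupancy limits."""
    return units * flops_per_unit_per_clock * clock_ghz


integrated = peak_gflops(units=72, flops_per_unit_per_clock=16, clock_ghz=1.0)
discrete_2010 = 1300.0  # GFLOPS figure JPR quotes for a 2010 desktop discrete GPU

print(f"integrated GPU: {integrated:.0f} GFLOPS")  # integrated GPU: 1152 GFLOPS
print(f"ratio to 2010 discrete GPU: {integrated / discrete_2010:.2f}")  # ratio to 2010 discrete GPU: 0.89
```

Because peak throughput is a simple product, it scales linearly with the number of units, which is also why these figures say nothing about how much of that peak a real workload achieves.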

We acquire 90% of the information we digest through our eyes. Naturally, we need an abundance of information-generating devices to feed our unquenchable appetite for information. The machines we’re building have a similar demand for information, though not always for visual information. In some cases, such as robots and autonomous vehicles, visual information is exactly what they need. The GPU can not only generate pixels but also process the photons captured by sensors.

Scalability is the GPU’s other big advantage over most processors. To date, there doesn’t seem to be an upper limit on how far a GPU can scale. The current crop of high-end GPUs has more than 3,000 32-bit floating-point processors, and the next generation will cross 5,000. The same design, if done properly, can be scaled down to as few as two SIMD processors, or even one. The GPU’s scalability underscores the notion that one size does not fit all, nor does it have to. For example, Nvidia brought its desktop Kepler GPU architecture to the Logan design, which shipped as the Tegra K1, and AMD uses its Radeon GPUs in its APUs.

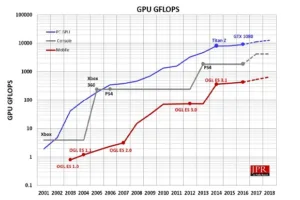

It’s probably safe to say the GPU is the most versatile, scalable, manufacturable, and powerful processor ever built. Nvidia, which claims to have invented the term GPU (it didn’t; 3Dlabs did, in 1993, according to JPR), built its first device with hardware transform and lighting, the GeForce 256, in 1999 at 220nm. Since then, GPUs from all suppliers have ridden the Moore’s law curve to ever smaller feature sizes and, in the process, delivered exponentially greater performance. Today’s high-end GPUs have more than 15 billion transistors. The next generation is expected to carry as much as 32 GB of second-generation high-bandwidth memory (HBM2) and to exceed 18 billion transistors.

GPUs have been one of the best, most significant developments in our lifetime, the firm concluded.