SMPTE organized a webinar conducted by Lars Borg, a principal scientist at Adobe who was also very active in the development of SMPTE standard ST-2094. In the webcast, Borg describes the various versions of dynamic metadata defined in ST-2094 and explains why they will improve the viewing experience for HDR content.

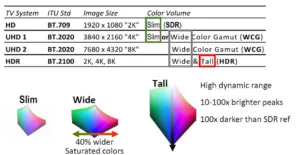

Borg started with some basics on High Dynamic Range (HDR), color volume and Wide Color Gamut (WCG). He came up with a very nice way to describe these concepts in terms of current ITU standards and TV systems as shown in the graphic below.

HD content has a slim color volume, as its primaries are defined per BT.709 and its luminance is limited. BT.2020 allows for wider color primaries and a wider color gamut using the bigger 2020 encoding container, but the actual content can have a slim color gamut (i.e., the HD gamut) or something wider (WCG). WCG is not precisely defined, but it is generally accepted to mean anything larger than BT.709. WCG displays are able to show more saturated colors, but the term says nothing about the luminance levels at which these deeply saturated colors can be displayed.
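To put "slim" and "wide" into numbers, a minimal sketch can compare the areas of the BT.709, DCI-P3 and BT.2020 primary triangles on the CIE 1931 xy chromaticity diagram. The primaries below are the published chromaticity coordinates; the helper names (`triangle_area`, `coverage_of`) are illustrative. Note that this is a purely two-dimensional comparison that ignores luminance entirely, which is exactly why gamut alone cannot describe a "tall" HDR color volume.

```python
# Chromaticity coordinates (CIE 1931 x, y) of the R, G, B primaries.
PRIMARIES = {
    "BT.709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "DCI-P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "BT.2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def triangle_area(points):
    """Shoelace formula for the area of the triangle spanned by three xy points."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


def coverage_of(container, gamut):
    """Area of `gamut` as a fraction of `container` in xy.

    This is a 2D proxy only: it says nothing about luminance (color volume).
    """
    return triangle_area(PRIMARIES[gamut]) / triangle_area(PRIMARIES[container])


if __name__ == "__main__":
    for name in ("BT.709", "DCI-P3"):
        print(f"{name}: {coverage_of('BT.2020', name):.1%} of the BT.2020 xy triangle")
```

By this measure BT.709 covers roughly 53% and DCI-P3 roughly 72% of the BT.2020 triangle. Measured in a perceptual space such as CIE u'v' or CIELAB the percentages differ, so treat these figures as an illustration rather than a standard metric.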

Color volume really comes into play with BT.2100, which defines HDR and standardizes both PQ-based and HLG-based options. Borg’s nice addition to this discussion is the term “tall” to describe the color volume of HDR content. It means taking into account the color gamut of the display over the full range of luminance values. This volume, typically measured in CIELAB space, is bigger than that of typical HD, UHD1 or UHD2 content and provides a valid way to characterize the performance of a display.

HDR Media Volume vs HDR Display Color Volume

Borg then made a distinction between HDR media color volume and HDR display color volume. “The color volume of PQ-based media extends from very low luminance levels to 10K nits and over the full BT.2020 RGB primaries,” explained Borg, “but a display’s color volume is likely to not cover the full RGB primaries of BT.2020 or reach 10K nits. The UHD Alliance Premium specification is for 90% of DCI-P3 and 1000 nits for LCDs and 540 nits for OLED HDR displays. Thus, the display color volume represents less than 10% of the media color volume.”
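One way to see how much of the PQ media range a real display can reach is to run the SMPTE ST 2084 (PQ) inverse EOTF, which maps absolute luminance (0 to 10,000 cd/m²) to a normalized signal value. The constants below are the ones defined in ST 2084; the function names are illustrative, and the sketch handles luminance only, not the chromatic part of the color volume.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_encode(nits: float) -> float:
    """Inverse EOTF: absolute luminance in cd/m^2 (0..10000) -> PQ signal (0..1)."""
    y = min(max(nits, 0.0), 10000.0) / 10000.0
    ym = y ** M1
    return ((C1 + C2 * ym) / (1.0 + C3 * ym)) ** M2


def pq_decode(signal: float) -> float:
    """EOTF: PQ signal (0..1) -> absolute luminance in cd/m^2."""
    e = min(max(signal, 0.0), 1.0) ** (1.0 / M2)
    return 10000.0 * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1.0 / M1)


if __name__ == "__main__":
    # Peak luminance of SDR reference, UHDA OLED and LCD tiers, and PQ's ceiling.
    for peak in (100.0, 540.0, 1000.0, 10000.0):
        print(f"{peak:>7.0f} nits -> PQ signal {pq_encode(peak):.3f}")
```

A 1000-nit display tops out at a PQ signal of about 0.75, so roughly the top quarter of the code range, everything from 1,000 to 10,000 nits, lies beyond what it can reproduce and must be tone mapped.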

In terms of content creation, Borg noted that there are several ways to create HDR and SDR content today. One path creates the HDR first, then derives the SDR version. He thinks this is best for real-time workflows. A second path grades the SDR first and then the HDR, which means you focus on the vast majority of users and revenue first, then add the ‘sizzle’ of HDR.

The third path uses two independent grades. This yields the highest quality but is the most expensive option, and it can actually be difficult for the colorist, so it is not always the first choice.

Borg then described what he sees as a hierarchy of HDR visual experience. At the lower end of this spectrum he placed HLG. HLG carries no metadata; the dynamic range is set at the camera. The dynamic range can then be scaled at the TV based upon its capabilities, in the same way that SDR images are “stretched” today. This has the advantage of maintaining a consistent average brightness level across SDR and HDR content, but Borg thinks it may also change skin tones.
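The display-side scaling of HLG can be sketched with the BT.2100 HLG system gamma, which adapts the rendering to the display's nominal peak luminance L_W. The sketch below implements only the luminance part of the HLG OOTF, assumes a zero display black level, and uses the basic gamma formula, which BT.2100 gives for displays of roughly 400 to 2000 cd/m²; the function names are illustrative.

```python
import math


def hlg_system_gamma(peak_nits: float) -> float:
    """BT.2100 HLG system gamma for nominal peak display luminance L_W (cd/m^2).

    Basic formula, intended for roughly 400 to 2000 cd/m^2 displays.
    """
    return 1.2 + 0.42 * math.log10(peak_nits / 1000.0)


def hlg_display_luminance(scene_y: float, peak_nits: float) -> float:
    """Luminance part of the HLG OOTF: Y_D = L_W * Y_S ** gamma.

    Assumes a zero display black level (L_B = 0), so alpha = L_W.
    scene_y is normalized scene-linear luminance in [0, 1].
    """
    scene_y = min(max(scene_y, 0.0), 1.0)
    return peak_nits * scene_y ** hlg_system_gamma(peak_nits)


if __name__ == "__main__":
    # The same HLG scene luminance rendered on three hypothetical displays.
    for peak in (500.0, 1000.0, 2000.0):
        gamma = hlg_system_gamma(peak)
        out = hlg_display_luminance(0.1, peak)
        print(f"{peak:.0f} nits: gamma {gamma:.3f}, scene Y=0.1 -> {out:.1f} nits")
```

Because the gamma changes with L_W, midtones (where skin tones typically sit) are rendered with slightly different contrast on each display, which is one plausible mechanism behind the skin-tone concern Borg raised.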

Metadata Allows a Step-up

A step up from HLG is the HDR10 experience. This uses PQ encoding (per ST-2084) with static metadata (per ST-2086). Ultra HD Blu-ray content and OTT HDR content are often delivered in this format. But in Borg’s hierarchy, the best experience comes from Dynamic Metadata for Color Volume Transform (DMCVT).
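HDR10 static metadata is a single set of values for the whole program: the mastering display color volume (ST 2086) plus the content light levels MaxCLL and MaxFALL (defined in CTA-861.3). A minimal sketch of the light-level computation, assuming frames are given as lists of linear (R, G, B) values in cd/m², shows why a dark scene ends up being tone mapped with the same figures as the brightest shot in the program.

```python
from typing import Iterable, List, Tuple

Pixel = Tuple[float, float, float]  # linear R, G, B in cd/m^2


def max_cll_fall(frames: Iterable[List[Pixel]]) -> Tuple[float, float]:
    """Return (MaxCLL, MaxFALL) for a whole program.

    MaxCLL  = brightest max(R, G, B) of any pixel in any frame.
    MaxFALL = highest per-frame average of max(R, G, B).
    """
    max_cll = 0.0
    max_fall = 0.0
    for frame in frames:
        levels = [max(p) for p in frame]  # per-pixel max(R, G, B)
        if not levels:
            continue
        max_cll = max(max_cll, max(levels))
        max_fall = max(max_fall, sum(levels) / len(levels))
    return max_cll, max_fall


if __name__ == "__main__":
    dark_frame = [(5.0, 4.0, 3.0), (20.0, 18.0, 15.0)]
    bright_frame = [(4000.0, 3500.0, 3000.0), (100.0, 100.0, 100.0)]
    print(max_cll_fall([dark_frame, bright_frame]))
```

The two-frame example returns (4000.0, 2050.0): both values come from the bright frame, and nothing in the metadata tells the TV that the dark frame never exceeds 20 nits.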

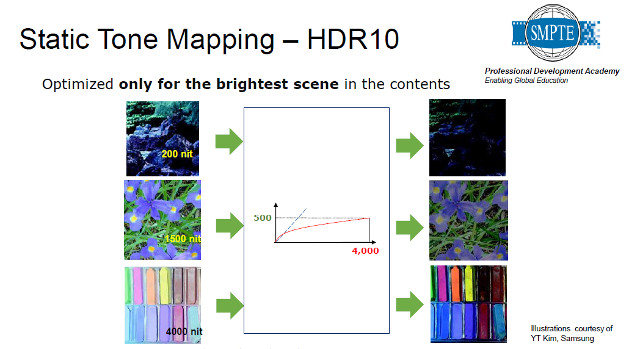

All these methods perform tone mapping at the display. Tone mapping is needed to transform the range of luminance values in the content to the range of luminance values available on the display. But how this is done has a big impact on image quality across different kinds of scenes.

The next two slides illustrate the concept nicely. With HDR10, the tone mapping is based upon the brightest scene in the media. In the example below, the content was graded on a 4000-nit monitor but played back on a 500-nit TV. The tone curve in this case tries to compress luminance values from perhaps 1000 to 4000 nits into a range of 300 to 500 nits to preserve those bright highlights. For very bright scenes this works well, but darker scenes appear too dim and lose detail.

A different tone mapping curve can be adopted here that does not try to preserve as much highlight detail. This clips some highlights but produces better midtones and low-light detail.
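The trade-off between the two curves can be illustrated with a deliberately simple knee curve: values below a knee pass through unchanged, and values between the knee and an assumed source peak are squeezed linearly into the remaining display headroom. This is a hypothetical curve for illustration only; real TVs use smooth, proprietary roll-offs, and the parameter values are assumptions chosen to match the 4000-nit grade on a 500-nit TV described above.

```python
def knee_tone_map(nits: float, knee: float, source_max: float, display_max: float) -> float:
    """Illustrative knee curve (hypothetical, not from any standard).

    Luminance up to `knee` passes through unchanged; [knee, source_max] is
    compressed linearly into [knee, display_max]; anything above source_max clips.
    """
    if nits <= knee:
        return nits
    nits = min(nits, source_max)
    return knee + (nits - knee) * (display_max - knee) / (source_max - knee)


# Curve A: preserve highlights all the way up to the 4000-nit grade.
HIGHLIGHT_PRESERVING = dict(knee=300.0, source_max=4000.0, display_max=500.0)
# Curve B: give up detail above 1000 nits to keep more midtones intact.
MIDTONE_PRESERVING = dict(knee=450.0, source_max=1000.0, display_max=500.0)

if __name__ == "__main__":
    for nits in (100.0, 400.0, 800.0, 2000.0, 3000.0):
        a = knee_tone_map(nits, **HIGHLIGHT_PRESERVING)
        b = knee_tone_map(nits, **MIDTONE_PRESERVING)
        print(f"{nits:6.0f} nits -> A {a:6.1f}   B {b:6.1f}")
```

With curve A, a 400-nit midtone lands at about 305 nits because everything above 300 nits shares just 200 nits of headroom. Curve B leaves it untouched but maps both 2000 and 3000 nits to 500 nits, so detail between them is lost.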

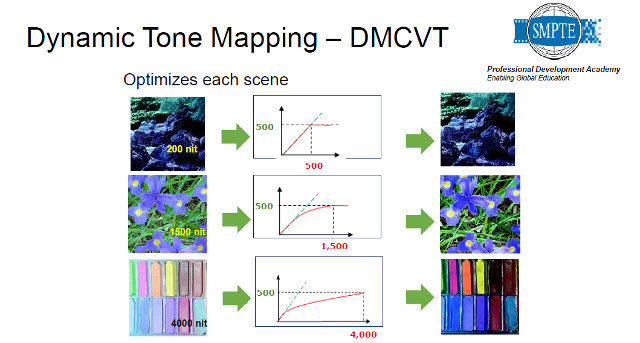

With DMCVT, a different tone mapping curve can be applied to each scene based upon the luminance levels in that particular scene. Notice in the graphic below how different the tone mapping curves are and how much the overall result improves.
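The core idea of dynamic metadata can be sketched by deriving a peak value per scene instead of per program and building the curve from it. The `SceneMetadata` record and the knee-based mapping below are hypothetical simplifications. The actual ST-2094 applications carry richer, method-specific data, and this is not any of them.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SceneMetadata:
    """Hypothetical per-scene record; real ST-2094 applications carry more."""
    scene_max_nits: float


def analyze_scene(frames: List[List[float]]) -> SceneMetadata:
    """frames: per-pixel luminance values (cd/m^2) for each frame of one scene."""
    return SceneMetadata(scene_max_nits=max(max(frame) for frame in frames))


def map_with_scene_metadata(nits: float, meta: SceneMetadata,
                            display_max: float, knee_fraction: float = 0.75) -> float:
    """Compress only when this scene exceeds the display; otherwise pass through."""
    if meta.scene_max_nits <= display_max:
        return min(nits, display_max)  # scene already fits the display
    knee = knee_fraction * display_max
    if nits <= knee:
        return nits
    nits = min(nits, meta.scene_max_nits)
    return knee + (nits - knee) * (display_max - knee) / (meta.scene_max_nits - knee)


if __name__ == "__main__":
    dim_scene = analyze_scene([[10.0, 450.0], [200.0, 300.0]])
    program_wide = SceneMetadata(scene_max_nits=4000.0)  # what static metadata would say
    print(map_with_scene_metadata(420.0, dim_scene, display_max=500.0))
    print(map_with_scene_metadata(420.0, program_wide, display_max=500.0))
```

On a 500-nit display, a 420-nit pixel in a scene that peaks at 450 nits passes through unchanged with per-scene metadata, while the same pixel mapped with a program-wide 4000-nit peak comes out near 377 nits.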

DMCVT can also improve the presentation of SDR content. Borg noted that if an SDR commercial comes on after HDR content, the TV must switch modes and will display the SDR content in a more confined color volume and luminance range. If the SDR content is tagged properly at distribution, tone mapping can be used to expand its luminance range, reducing jarring discontinuities in image consistency.
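The simplest form of such an expansion is a fixed gain that places SDR 100% white at the HDR reference white of 203 cd/m² suggested in ITU-R BT.2408, rather than at a nominal 100 cd/m². The sketch assumes a pure 2.4 power-law SDR decode (BT.1886 with zero black) and a 100-nit SDR reference peak; the constant and function names are illustrative. A real inverse tone mapper would also expand highlights non-linearly, which this linear gain does not attempt.

```python
SDR_PEAK_NITS = 100.0        # assumed nominal SDR reference peak
HDR_REFERENCE_WHITE = 203.0  # HDR reference white suggested in ITU-R BT.2408


def sdr_signal_to_nits(signal: float, gamma: float = 2.4) -> float:
    """Decode a normalized SDR signal with a pure power law (BT.1886, zero black)."""
    signal = min(max(signal, 0.0), 1.0)
    return SDR_PEAK_NITS * signal ** gamma


def sdr_to_hdr_nits(signal: float) -> float:
    """Linear gain so that SDR 100% white lands on HDR reference white."""
    return sdr_signal_to_nits(signal) * HDR_REFERENCE_WHITE / SDR_PEAK_NITS


if __name__ == "__main__":
    for code in (0.0, 0.5, 1.0):
        print(f"SDR {code:.0%} -> {sdr_to_hdr_nits(code):.1f} nits in the HDR presentation")
```

The design choice here is to match the commercial's white level to the graphics and diffuse-white level of the surrounding HDR program, which is what keeps the switch from looking like a sudden drop in brightness.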

ST-2094 Has Options

Borg then described the basic outline of ST-2094, although he did not provide many details. The standard has six parts, five of which have been published, with the sixth coming soon. Overall, it standardizes the carriage of dynamic metadata from four different companies. The standard mostly focuses on the mechanism for carrying and signaling this dynamic metadata, allowing each of the four companies to compete for market acceptance of its method. The four companies and methods are:

- Dolby Labs (Parametric Tone Mapping) (ST-2094-10 App 1)

- Philips (Parameter-based Color Volume Reconstruction) (ST-2094-20 App 2)

- Technicolor (Reference-based Color Volume Mapping) (ST-2094-30 App 3)

- Samsung (Scene-based Color Volume Mapping) (ST-2094-40 App 4)

ST-2094-1 describes the common metadata items and structures. The four application parts above are complemented by ST-2094-2, which describes the key-length-value (KLV) encoding and the MXF container mapping. This is the part that has yet to be approved.
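KLV coding (SMPTE ST 336) wraps each item as a 16-byte Universal Label key, a BER-encoded length and the value bytes. A minimal encode/decode sketch follows. The key is a placeholder: all SMPTE Universal Labels start with the bytes 06 0E 2B 34, but the remaining 12 bytes here are zeros and are not the registered ST-2094 label, and the payload is arbitrary test data rather than real ST-2094 metadata.

```python
from typing import Tuple

# Placeholder key: SMPTE UL prefix 06 0E 2B 34 followed by 12 zero bytes.
# This is NOT the registered ST-2094 label.
PLACEHOLDER_KEY = bytes.fromhex("060e2b34") + bytes(12)


def ber_length(n: int) -> bytes:
    """BER length: short form below 128, else 0x80 | byte count, then big-endian bytes."""
    if n < 0x80:
        return bytes([n])
    body = n.to_bytes((n.bit_length() + 7) // 8, "big")
    return bytes([0x80 | len(body)]) + body


def klv_encode(key: bytes, value: bytes) -> bytes:
    """Concatenate a 16-byte key, the BER length of the value, and the value."""
    if len(key) != 16:
        raise ValueError("KLV keys (SMPTE Universal Labels) are 16 bytes long")
    return key + ber_length(len(value)) + value


def klv_decode(packet: bytes) -> Tuple[bytes, bytes, int]:
    """Decode one KLV triplet; returns (key, value, bytes consumed)."""
    key = packet[:16]
    first = packet[16]
    if first < 0x80:
        length, offset = first, 17  # short-form length
    else:
        count = first & 0x7F  # long form: number of length bytes that follow
        length = int.from_bytes(packet[17:17 + count], "big")
        offset = 17 + count
    return key, packet[offset:offset + length], offset + length


if __name__ == "__main__":
    packet = klv_encode(PLACEHOLDER_KEY, b"\x01" * 300)
    key, value, used = klv_decode(packet)
    print(packet[16:19].hex(), len(value), used)
```

Returning the number of bytes consumed lets a caller walk a buffer that holds several KLV triplets back to back.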

ST-2094 allows for the carriage of multiple dynamic metadata “tracks”. That means that track 1 may be optimized for a 500 cd/m² final display while track 2 is for a 1000 cd/m² display, for example. Each track also specifies the time interval over which the tone mapping applies and which curve to use. It even allows for the windowing of information, so a single frame could have areas where different tone maps are applied. However, Borg noted that he knew of no one actually implementing this option.
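How a receiver might use tracks, time spans and windows can be sketched with a hypothetical data model. The class and field names, the curve identifiers and the "closest target peak by luminance ratio" selection rule are all assumptions for illustration; they are not the ST-2094 syntax or a rule taken from the standard.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Window:
    """Hypothetical rectangular processing window (x0, y0, x1, y1), end-exclusive."""
    rect: Tuple[int, int, int, int]
    curve_id: str


@dataclass
class MetadataTrack:
    """Hypothetical track: target display peak, active frame span and curves."""
    target_display_nits: float
    start_frame: int
    end_frame: int  # exclusive
    default_curve_id: str
    windows: List[Window] = field(default_factory=list)


def select_track(tracks: List[MetadataTrack], display_nits: float,
                 frame: int) -> Optional[MetadataTrack]:
    """Pick the active track whose target peak is closest to the display's peak,
    measured as a luminance ratio (an assumed rule, not from ST-2094)."""
    active = [t for t in tracks if t.start_frame <= frame < t.end_frame]
    if not active:
        return None
    return min(active, key=lambda t: abs(math.log(t.target_display_nits / display_nits)))


def curve_for_pixel(track: MetadataTrack, x: int, y: int) -> str:
    """First window containing the pixel wins; otherwise use the track's default curve."""
    for window in track.windows:
        x0, y0, x1, y1 = window.rect
        if x0 <= x < x1 and y0 <= y < y1:
            return window.curve_id
    return track.default_curve_id
```

For example, with one track targeting 500 cd/m² and another targeting 1000 cd/m², a 600-nit TV would pick the 500 cd/m² track and an 800-nit TV the 1000 cd/m² track under this ratio-based rule. Comparing ratios rather than absolute differences reflects the roughly logarithmic way we perceive brightness, but it is a design assumption.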

Borg then showed slides giving some details on the four approaches, which differ considerably in their methodology (a topic for a longer discussion). The slides can be downloaded here.

Following the formal presentation, Borg fielded a question, noting that each of the dynamic metadata methods described in ST-2094 requires its own processing and that no “transcodes” from one to another exist. Content creators are unlikely to create four versions, so they will probably pick one for mastering and distribution. It will then be up to set-top box or TV makers to find ways to accept all four dynamic metadata formats and transcode between them, if they choose to do so.

Borg concluded by noting that dynamic metadata is still immature and that many changes are likely in the coming years. – CC