CableLabs, the development group funded by the cable industry, held its summer conference last week in Colorado. A number of topics were discussed, but I want to focus on its views of how displays will be used in the future.

The video below, called The Near Future, Ready for Anything, was produced specifically for the event and offers a compelling vision of how we will interact with displays. It takes a child's perspective to ground the applications they will use and to show how displays will be central to that experience. The message? Advanced 3D displays will become mainstream and will require huge increases in network bandwidth, so the cable industry needs to start thinking about how it will meet these needs.

One key application: education. Here, students will wear glasses to visualize animation and text. There will be tablet- and watch-based displays as well as transparent interactive displays. There may also be holodeck-like rooms for even more immersive interaction. And there will be personal AI assistants to help students learn and explore wherever they go. Naturally, the Internet of Things will figure prominently in this near future as well. The film also presents a vision of the workplace of the future, with volumetric interactive displays and CAVE-like systems populated by virtual objects and avatars.

CableLabs sees future screens like this being used in education

The CableLabs Near Future film series is designed to serve as inspiration for innovators. Why? Because the cable industry provides a huge share of broadband service and wants to remain relevant in the future. Even as 5G networks roll out, their backhaul will need massive broadband capacity, which cable operators can and will provide.

So how near is this future? CableLabs suggests many aspects of it will roll out between three and eight years from now. Two of the presenters, Jon Karafin from Light Field Labs and Ryan Damm from Visby, talked about the light field ecosystem, from capture through distribution to display. We had a chance to talk with Damm about the event and this vision of the near future.

Damm said he thought the video was very well done because it provides a good picture of the applications we will use, laying a foundation for why we need to build network capacity to support them. As for the time frame, Damm sees AR/VR applications starting to drive bandwidth within the next year. While the displays in these devices will not be true light field displays but rather multi-planar displays, they will render views from light field data. “This is a great way to start to develop the light field capture, processing and delivery ecosystem and understand the applications that can utilize this rich data set,” said Damm. “More complex implementations like videowall-scale light field display solutions are probably ten years out, however.”

Visby is focused on the capture and processing part of the light field ecosystem. In his presentation, Damm noted that true light field acquisition means capturing light with a very large array of pinhole cameras at infinitesimal spacing. That is not practical, so sparser camera arrays are used, with interpolation filling in the views between cameras.
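
To make the interpolation idea concrete, here is a minimal sketch that synthesizes an in-between view from two neighboring cameras by simple linear blending. It is an illustrative assumption, not Visby's method: practical systems usually estimate depth or disparity and warp pixels before blending. The function `interpolate_view` and the tiny grayscale "images" are hypothetical.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of intensities in [0, 1]


def interpolate_view(left: Image, right: Image, t: float) -> Image:
    """Approximate a virtual camera between two neighboring cameras.

    t = 0.0 reproduces the left view and t = 1.0 the right view. This naive
    cross-fade ignores parallax; practical systems usually estimate depth or
    disparity and warp pixels before blending.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie between 0 and 1")
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("views must have the same dimensions")
    return [
        [(1.0 - t) * a + t * b for a, b in zip(row_l, row_r)]
        for row_l, row_r in zip(left, right)
    ]


# Two hypothetical 2x3 views captured by adjacent cameras in a sparse array.
left_view = [[0.0, 0.2, 0.4], [0.6, 0.8, 1.0]]
right_view = [[0.2, 0.4, 0.6], [0.8, 1.0, 1.0]]

# Synthesize the view a camera halfway between them might have seen.
halfway = interpolate_view(left_view, right_view, 0.5)
print(halfway)
```

The sparser the array, the more the result depends on how well those missing views are filled in, which is why interpolation quality matters so much to capture systems.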

So how should this light field data be represented and compressed for distribution? The JPEG Pleno group is working on a standard that sends a series of conventional video images while taking advantage of the massive redundancy between them to achieve very high compression.
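
The payoff from that redundancy can be shown with a toy experiment. The sketch below does not implement JPEG Pleno; it uses Python's general-purpose `zlib` compressor as a stand-in and compares compressing each view independently with compressing the first view plus residuals against a prediction from the neighboring view. The synthetic scene (a random texture that each camera sees shifted by one pixel) and the known one-pixel disparity are assumptions; a real codec would have to estimate disparity itself.

```python
import random
import zlib
from typing import List

Image = List[bytes]  # one bytes object per row of 8-bit grayscale pixels


def make_views(num_views: int, width: int, height: int, seed: int = 1) -> List[Image]:
    """Synthetic light field: every camera sees the same random texture, shifted one pixel."""
    rng = random.Random(seed)
    scene = [bytes(rng.randrange(256) for _ in range(width + num_views)) for _ in range(height)]
    return [[row[v:v + width] for row in scene] for v in range(num_views)]


def flatten(image: Image) -> bytes:
    return b"".join(image)


def predict_from_neighbor(prev: Image) -> Image:
    """Predict the next view by shifting the previous one left by one pixel (assumed disparity)."""
    return [row[1:] + row[-1:] for row in prev]


def independent_size(views: List[Image]) -> int:
    """Total compressed bytes when each view is coded on its own."""
    return sum(len(zlib.compress(flatten(v), 9)) for v in views)


def predictive_size(views: List[Image]) -> int:
    """Compressed bytes for the first view plus residuals against neighbor predictions."""
    total = len(zlib.compress(flatten(views[0]), 9))
    for prev, cur in zip(views, views[1:]):
        pred = flatten(predict_from_neighbor(prev))
        # Residual modulo 256 keeps values in one byte and is exactly reversible.
        residual = bytes((c - p) % 256 for c, p in zip(flatten(cur), pred))
        total += len(zlib.compress(residual, 9))
    return total


views = make_views(num_views=8, width=64, height=64)
print("independent:", independent_size(views), "bytes")
print("predictive: ", predictive_size(views), "bytes")
```

Because the random texture is essentially incompressible on its own, nearly all of the savings come from the prediction between neighboring views, which is the kind of inter-view redundancy a light field codec is designed to exploit.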

Others are looking at what Damm described as Lumigraph approaches, which combine raw image data (2D images encoded as video or pixels) with volumetric data, usually polygons. Polygons and textures are the technique used to present video games. This can work well for computer-generated content but will be too complex for live events, and it does not handle objects like smoke or clouds, or transparent and semi-transparent objects, well.

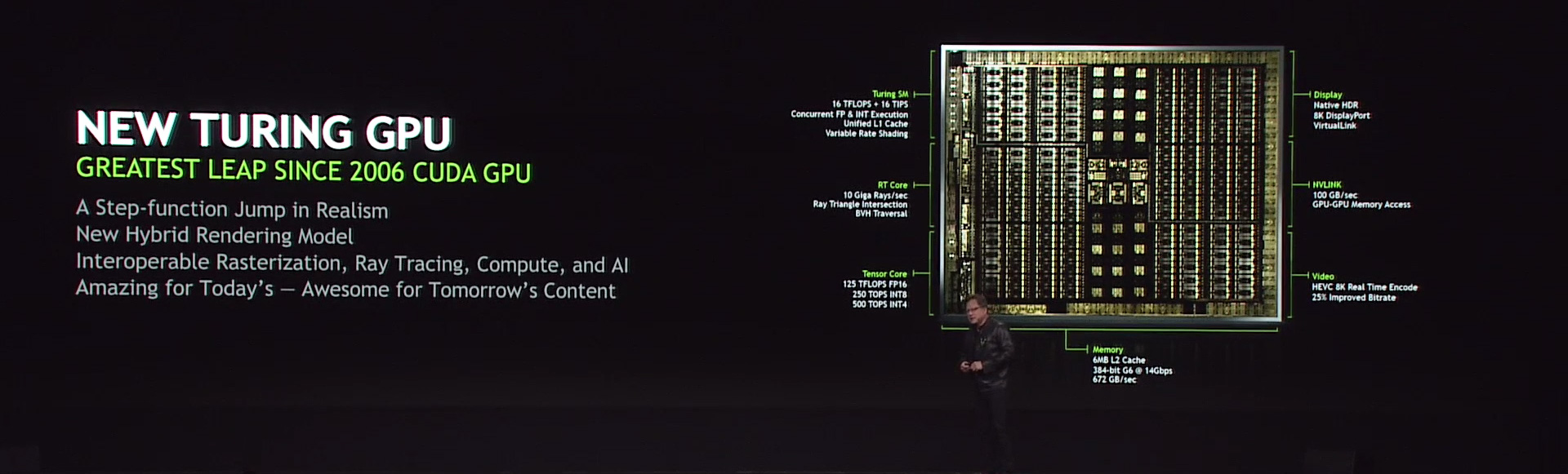

Light Field Labs / CableLabs are also looking at ‘real time’ solutions, in which only polygons are transmitted and the rendering and lighting are deferred to the client device. This approach got a major boost at SIGGRAPH this week when Nvidia CEO Jensen Huang gave a keynote address. He unveiled Nvidia’s new Turing-architecture GPU with RT Cores, packaged in a GPU card called the Quadro RTX. He claimed this is the first real-time ray tracing GPU, capable of 10 gigarays per second (see the full talk here: https://videocardz.com/77245/watch-nvidia-jensen-huang-keynote-at-siggraph-2018-here).
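
To put 10 gigarays per second in perspective, a quick back-of-the-envelope calculation helps. The sketch below assumes an 8K UHD frame (7680 × 4320 pixels) refreshed at 60 frames per second; the frame rate is an assumption for illustration, not a figure from the keynote, and `rays_per_pixel` is a hypothetical helper.

```python
def rays_per_pixel(rays_per_second: float, width: int, height: int, fps: float) -> float:
    """Average ray budget per pixel per frame at a given resolution and frame rate."""
    pixels_per_second = width * height * fps
    return rays_per_second / pixels_per_second


# Assumptions: 10 gigarays/s (the keynote claim), an 8K UHD frame, 60 frames/s.
budget = rays_per_pixel(10e9, 7680, 4320, 60)
print(f"{budget:.2f} rays per pixel per frame")  # 5.02 rays per pixel per frame
```

Roughly five rays per pixel per frame is a tight budget for lighting that needs many bounces, so the claimed rate is impressive but far from unlimited at 8K.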

Why is this so important? First, ray tracing creates photorealistic images because it models in detail how light rays travel and interact with surfaces. For example, if a light shines on a wall, some of that light is reflected back into the room. That light may alter a shadow or bounce off another surface to add more illumination to an object. Computing how all these light rays bounce around the room to produce the final illumination is an extremely demanding process, which is why ray tracing has not been a real-time technique until now. That makes this a major breakthrough in processing power.
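
The core operation in ray tracing is finding where a ray hits the scene and then asking how much light reaches that point. The minimal sketch below, assuming a toy scene made only of spheres and lit by a single point light, traces one primary ray and casts a shadow ray toward the light to compute direct diffuse (Lambertian) shading. It deliberately stops at direct lighting: the bouncing light described above would require recursively tracing more rays from each hit point, which this sketch omits. All names are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]
Sphere = Tuple[Vec, float]  # (center, radius)


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(a: Vec) -> Vec:
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


def hit_sphere(origin: Vec, direction: Vec, sphere: Sphere) -> Optional[float]:
    """Distance along a unit-length ray to the nearest hit in front of it, or None."""
    center, radius = sphere
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:  # skip hits behind the ray origin
            return t
    return None


def nearest_hit(origin: Vec, direction: Vec, scene: List[Sphere]) -> Optional[Tuple[float, Sphere]]:
    """Closest sphere intersected by the ray, together with its distance."""
    best: Optional[Tuple[float, Sphere]] = None
    for sphere in scene:
        t = hit_sphere(origin, direction, sphere)
        if t is not None and (best is None or t < best[0]):
            best = (t, sphere)
    return best


def shade(origin: Vec, direction: Vec, scene: List[Sphere], light: Vec) -> float:
    """Direct diffuse brightness (0 to 1) seen along one primary ray."""
    hit = nearest_hit(origin, direction, scene)
    if hit is None:
        return 0.0  # the ray escapes to a black background
    t, (center, _radius) = hit
    point = add(origin, scale(direction, t))
    normal = normalize(sub(point, center))
    offset = sub(light, point)
    light_distance = math.sqrt(dot(offset, offset))
    to_light = scale(offset, 1.0 / light_distance)
    # Shadow ray: nudge the start off the surface, then look for blockers before the light.
    blocker = nearest_hit(add(point, scale(normal, 1e-4)), to_light, scene)
    if blocker is not None and blocker[0] < light_distance:
        return 0.0
    return max(0.0, dot(normal, to_light))  # Lambert's cosine law


camera = (0.0, 0.0, 0.0)
forward = (0.0, 0.0, -1.0)
light = (3.0, 0.0, -1.0)
ball = ((0.0, 0.0, -5.0), 1.0)
blocker_ball = ((1.5, 0.0, -2.5), 0.5)  # sits between the lit point and the light

print(shade(camera, forward, [ball], light))                # lit: about 0.707
print(shade(camera, forward, [ball, blocker_ball], light))  # in shadow: 0.0
```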

In addition, Nvidia will develop a full materials library with BRDF (bidirectional reflectance distribution function) data, which means these materials will behave like real materials, with diffuse and specular reflections as well as opaque and translucent properties. The engine supports area lights with soft shadows at very high fidelity (8K and HDR). A number of clips showed photorealistic images being rendered and changed in real time. This is essentially the light field solution, in a real-time engine, that LFL / CableLabs have been advocating.
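
A BRDF answers one question: given light arriving from one direction, how much of it is reflected toward another? The sketch below evaluates a simple textbook model, a Lambertian diffuse term plus a normalized Blinn-Phong specular lobe. This model is an assumed stand-in chosen for brevity; nothing here reflects the format or material models of Nvidia's library, and `Material`, `brdf` and `reflected` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec = Tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(a: Vec) -> Vec:
    length = math.sqrt(dot(a, a))
    return (a[0] / length, a[1] / length, a[2] / length)


@dataclass
class Material:
    albedo: float     # fraction of light reflected diffusely, 0..1
    specular: float   # weight of the glossy lobe, 0..1
    shininess: float  # Blinn-Phong exponent; larger means a tighter highlight


def brdf(m: Material, normal: Vec, to_light: Vec, to_viewer: Vec) -> float:
    """Lambertian diffuse plus normalized Blinn-Phong specular (a textbook model)."""
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    if dot(n, l) <= 0.0 or dot(n, v) <= 0.0:
        return 0.0  # light or viewer is below the surface
    half = normalize((l[0] + v[0], l[1] + v[1], l[2] + v[2]))
    diffuse = m.albedo / math.pi
    glossy = m.specular * (m.shininess + 8.0) / (8.0 * math.pi) * max(0.0, dot(n, half)) ** m.shininess
    return diffuse + glossy


def reflected(m: Material, normal: Vec, to_light: Vec, to_viewer: Vec, light_intensity: float) -> float:
    """Light reflected toward the viewer: BRDF x incoming light x cosine of incidence."""
    cos_in = max(0.0, dot(normalize(normal), normalize(to_light)))
    return brdf(m, normal, to_light, to_viewer) * light_intensity * cos_in


matte = Material(albedo=0.8, specular=0.0, shininess=1.0)
glossy_paint = Material(albedo=0.5, specular=0.4, shininess=64.0)
up = (0.0, 0.0, 1.0)
light_dir = (1.0, 0.0, 1.0)

# A viewer in the mirror direction sees the highlight; one looking from the light's side does not.
print(reflected(glossy_paint, up, light_dir, (-1.0, 0.0, 1.0), 1.0))
print(reflected(glossy_paint, up, light_dir, (1.0, 0.0, 1.0), 1.0))
print(reflected(matte, up, light_dir, (-1.0, 0.0, 1.0), 1.0))
```

The matte material looks the same from every viewing angle, while the glossy one concentrates light around the mirror direction; a measured BRDF library generalizes this by replacing the two hand-written terms with data for each real material.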

While this seems like a big breakthrough, Damm still thinks the whole approach has limitations and will eventually prove to be a transitional step toward something different. For example, to transmit a sporting event as a live light field broadcast, you would have to create very detailed models of the stadium, players and materials and download all of that to the consumer before the game. Damm does not see this as a practical solution, so Visby is working on a more fundamental light field codec that is not based on discrete cosine transforms (DCTs) of images. He says they have proven that it works with today’s technology and can deliver light field data at reasonable data rates (100-200 Mbps). He will need another year or two to develop it further, however.
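
For context on what Damm's codec is moving away from: conventional image and video codecs transform small blocks of pixels with a discrete cosine transform so that most of a smooth block's energy lands in a few low-frequency coefficients, which are cheap to encode. The sketch below is a plain orthonormal 1D DCT-II on an eight-sample row (real codecs use 2D transforms plus quantization and entropy coding). It only illustrates this energy compaction and says nothing about how Visby's codec works; the sample row is hypothetical.

```python
import math
from typing import List


def dct_ii(block: List[float]) -> List[float]:
    """Orthonormal 1D DCT-II, the transform family behind JPEG-style codecs."""
    n = len(block)
    coeffs = []
    for k in range(n):
        weight = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        total = sum(x * math.cos(math.pi * (i + 0.5) * k / n) for i, x in enumerate(block))
        coeffs.append(weight * total)
    return coeffs


def energy_fraction(coeffs: List[float], first: int) -> float:
    """Share of total energy (sum of squares) held by the first `first` coefficients."""
    total = sum(c * c for c in coeffs)
    return sum(c * c for c in coeffs[:first]) / total


# A hypothetical smooth row of eight pixel values, typical of flat image regions.
smooth_row = [10.0, 11.0, 12.0, 13.0, 14.0, 15.0, 16.0, 17.0]
coeffs = dct_ii(smooth_row)
print([round(c, 2) for c in coeffs])
print(f"{energy_fraction(coeffs, 2):.4f} of the energy is in the first two coefficients")
```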

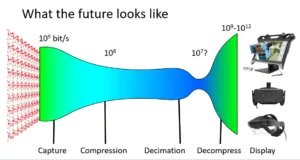

Meanwhile, CableLabs has estimated the bandwidth for a fairly small 2’ × 2’ light field display and determined that a data rate of 800 Mbps would be needed. That figure is uncompressed, however, so compression can bring it down. That’s where Visby comes in, as it is working on the whole pipeline. Damm’s bandwidth pipeline for light field data is shown in the image above.
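
A little arithmetic puts the 800 Mbps figure in context. The sketch below converts it into data volume per hour and computes the compression ratio needed to reach a target rate. Using Damm's 100-200 Mbps range as that target is an assumption for illustration only: the two figures were not necessarily quoted for the same display or content. The helper names are hypothetical.

```python
def gigabytes_per_hour(rate_mbps: float) -> float:
    """Data volume in gigabytes (10**9 bytes) for one hour at a rate in megabits per second."""
    bits_per_hour = rate_mbps * 1e6 * 3600
    return bits_per_hour / 8 / 1e9


def compression_ratio(uncompressed_mbps: float, target_mbps: float) -> float:
    """How many times smaller the stream must become to fit the target rate."""
    return uncompressed_mbps / target_mbps


UNCOMPRESSED_MBPS = 800.0  # CableLabs estimate for a 2' x 2' light field display

print(f"{gigabytes_per_hour(UNCOMPRESSED_MBPS):.0f} GB per hour uncompressed")  # 360 GB per hour uncompressed
# Assumption: treat Damm's 100-200 Mbps range as the target for this display.
for target in (100.0, 200.0):
    print(f"{compression_ratio(UNCOMPRESSED_MBPS, target):.0f}:1 needed to reach {target:.0f} Mbps")
```

Under that assumption, only 4:1 to 8:1 compression would be needed, modest by video-codec standards; the harder question is how the figure scales for larger displays.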

Many believe the march toward higher resolution, wider dynamic range and multi-dimensional displays is inevitable. All of these will require more bandwidth, which is why the cable industry is thinking about this future.