Dr. C. E. Thomas, Founder and CTO of Third Dimension Technologies (TDT), recently gave a SMPTE webinar for members titled “Streaming Model for Field of Light Displays.” Field of Light Displays (FoLDs) are what most others call Light Field Displays (LFDs). For this Display Daily article, however, I will use Dr. Thomas’s acronym.

The SMPTE webinar focused not on the displays themselves but on the technology needed to stream real-time field of light video, with synchronized sound, over more-or-less ordinary network connections.

TDT Flight Simulator with a field of light display. The image on the screen was captured from a real FoLD by a camera inside the pilot’s head-box, then photoshopped into this off-axis image. The image would not be visible to a person in this position. (Credit: Third Dimension Technologies)

TDT develops and manufactures FoLDs based on multiple projection systems and a diffusing screen. Unfortunately, few details about TDT’s display technology are available, either from the company’s website or from this webinar. Dr. Thomas did say:

- An array of light engines [e.g. pico- or micro-projectors] illuminates a diffusion screen to produce multiple angular perspective views of a scene. The diffusing screen has diffusion angles of about 1°H x 90°V.

- Horizontal Parallax Only (HPO) reduces computational requirement (human eyes are horizontally separated!)

- At least three overlaid images, rendered from different directions, enter each eye (three angular views projected into each eye)

- Around 2.0 arc minutes per pixel (assumes 1280 different pixels into each eye and fusion by the brain into a higher resolution)

- Projection-based technology scalable to large screen size
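Taken at face value, the figures in this list can be turned into a couple of rough numbers. Assuming (my assumption, not something Dr. Thomas stated) that the 1280 pixels per eye lie along the horizontal axis, the horizontal angle they cover is roughly:

$$
1280 \times 2.0\,\text{arcmin} = 2560\,\text{arcmin} = \tfrac{2560}{60}^{\circ} \approx 42.7^{\circ}
$$

The HPO saving can be counted the same way. If a display needs $N_h$ horizontal views, adding full parallax with $N_v$ vertical view positions multiplies the number of views to render by $N_v$; for a hypothetical 64 × 64 grid, that is 4096 views instead of 64:

$$
\frac{N_h N_v}{N_h} = N_v, \qquad 64 \times 64 = 4096 \ \text{views vs. } 64
$$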

The company developed a flight simulator based on this technology under a 2017 Air Force STTR project. It has horizontal-only parallax, although Dr. Thomas says that the system could be extended to vertical parallax if needed. The glasses-free 3D trainer shown in the photo came out of this project; the company says it can be customized for any airplane.

One of the issues with Field of Light Displays is the immense amount of data required to make them work correctly and show the desired image. This results in very high storage requirements, very high processing power (multiple teraflops) and very high bandwidth. This data is needed to drive all the system’s pixels – I had never heard anyone use the word “Terapixels” until I heard it from Dr. Thomas. This volume of data is a problem not just for TDT but for the many other companies developing similar light field technologies. Besides TDT, Dr. Thomas mentioned Zebra Imaging, FoVI3D, TIPD, Holochip, Ostendo, Avalon Holographics and Light Field Lab as being active in the FoLD business.

Summit, Oak Ridge National Laboratory’s new 200 Petaflop (200,000 Teraflop) computer became operational in 2018. (Credit: Oak Ridge National Laboratory)

Dr. Thomas used as his target a good consumer internet connection, which, at least nominally, is about 1 Gbps now and is expected to rise to about 10 Gbps in 2024. TDT is currently working on this problem with Oak Ridge National Laboratory (ORNL) in a project managed by the Air Force Research Laboratory (AFRL). ORNL is a natural partner in this project, in part because it has a new computer capable of 200 Petaflops, or 200,000 trillion floating-point operations per second. FoLDs don’t typically need quite this much computing power, but they do need multiple teraflops of processing power for the display to have a useful size, resolution and frame rate.
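To see why streaming finished images is out of reach, here is a back-of-envelope estimate in Python. The display parameters (64 horizontal views, 1280 × 720 pixels per view, 24-bit color, 60 frames per second) are hypothetical round numbers, not TDT specifications, and the estimate ignores compression entirely.

```python
def raw_bitrate_bps(views: int, width: int, height: int,
                    bits_per_pixel: int, fps: int) -> int:
    """Uncompressed bit rate if every rendered view is streamed as an image."""
    return views * width * height * bits_per_pixel * fps


# Hypothetical horizontal-parallax-only display (illustrative, not TDT's specs).
rate = raw_bitrate_bps(views=64, width=1280, height=720, bits_per_pixel=24, fps=60)
print(f"Raw rate: {rate / 1e9:.1f} Gbps")

# Compare against the consumer-link targets quoted in the webinar.
for link_name, link_bps in [("1 Gbps (today)", 1e9), ("10 Gbps (2024)", 10e9)]:
    print(f"{link_name}: raw stream needs {rate / link_bps:.1f}x the link capacity")
```

With these assumptions the raw stream is about 84.9 Gbps, roughly 85 times a 1 Gbps link and still about 8.5 times a 10 Gbps link. Compression would narrow the gap, but the view count multiplies the load that any codec would have to handle.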

The title of the project is “Open Standard for Display Agnostic 3D Streaming” (DA3DS). One of its main goals is to develop a standard for streaming the data needed to show real-time video, with synchronized audio, on a FoLD. The project is scheduled to end, and to produce its final report, by December 18, 2019. Dr. Thomas said in the webinar that he wasn’t sure whether the final report would be made public.

As part of the project, Insight Media (i.e. Display Daily’s Chris Chinnock) organized Streaming Media Field of Light Display (SMFoLD) industry workshops to examine this problem. Among other things, TDT, ORNL and the Air Force got input from other companies on how to approach the streaming problem for FoLDs. The 2016 and 2017 workshops were sponsored by the Air Force, so the presentations from those proceedings can be downloaded for free.

The 2018 workshop was sponsored by Insight Media so the abstracts are free but the full proceedings must be purchased. The proceedings for all three workshops are available through the SMFoLD.org website. Chris Chinnock told me this is not an ongoing event – 2018 was the last SMFoLD workshop. He added that the 2019 Display Summit was all about light field and holographic displays and the proceedings can be purchased through the Display Summit website.

Promoter Members of the Khronos Group. (Credit: Khronos Group.)

Streaming the data needed for FoLDs as images is simply not possible with today’s video streaming technology. The DA3DS project has therefore taken the approach of transmitting not the images but the OpenGL primitive graphics calls, along with the data those calls need, over the network. OpenGL is an application programming interface (API) from the Khronos Group. The current Promoter Members of the Khronos Group are shown in the image; a list of all 163 members is also available. The group produces royalty-free open standards for 3D graphics, virtual and augmented reality, parallel computing, neural networks, and vision processing. I have previously written about their OpenXR API for AR and VR applications. The display issues associated with AR and VR are, in fact, very similar to those associated with FoLD video.
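The core idea, sending drawing commands instead of pixels, can be sketched as a simple command stream. The opcodes, record layout and function names below are hypothetical and chosen for illustration; they are not the DA3DS/SMFoLD wire format, which the webinar did not describe at this level. Only the `GL_TRIANGLES` value is taken from OpenGL.

```python
import struct
from dataclasses import dataclass

# Hypothetical opcodes for two graphics calls. They are NOT defined by OpenGL
# or by the DA3DS/SMFoLD project; they only illustrate the idea.
OP_CLEAR_COLOR = 1  # models glClearColor(red, green, blue, alpha)
OP_DRAW_ARRAYS = 2  # models glDrawArrays(mode, first, count)

# Little-endian argument layout for each opcode.
ARG_FORMATS = {
    OP_CLEAR_COLOR: "<4f",  # four 32-bit floats
    OP_DRAW_ARRAYS: "<3i",  # three 32-bit ints
}

GL_TRIANGLES = 0x0004  # value of the real OpenGL enum


@dataclass
class Call:
    opcode: int
    args: tuple


def encode(calls: list[Call]) -> bytes:
    """Serialize calls as [opcode:u16][payload length:u16][payload] records."""
    out = bytearray()
    for call in calls:
        payload = struct.pack(ARG_FORMATS[call.opcode], *call.args)
        out += struct.pack("<HH", call.opcode, len(payload)) + payload
    return bytes(out)


def decode(data: bytes) -> list[Call]:
    """Parse a byte stream produced by encode() back into Call records."""
    calls, offset = [], 0
    while offset < len(data):
        opcode, length = struct.unpack_from("<HH", data, offset)
        offset += 4  # skip the 4-byte record header
        args = struct.unpack_from(ARG_FORMATS[opcode], data, offset)
        offset += length
        calls.append(Call(opcode, args))
    return calls


# One frame: clear to opaque black, then draw a single triangle.
frame = [Call(OP_CLEAR_COLOR, (0.0, 0.0, 0.0, 1.0)),
         Call(OP_DRAW_ARRAYS, (GL_TRIANGLES, 0, 3))]
stream = encode(frame)
print(len(stream), "bytes")  # 36 bytes
```

The two calls fit in 36 bytes regardless of how many pixels the receiving display has, which is the property this approach relies on.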

OpenGL is a well-established standard in very common use. You do not need to be a Khronos member to use OpenGL: the specification is royalty-free and freely available, and the official registry of specifications and headers is published on GitHub. One goal of the SMFoLD project was to produce an open standard for streaming not just to field of light displays but to all display types with the same data stream, including 2D, stereoscopic 3D, multi-view autostereoscopic 3D and volumetric displays that are not FoLDs. Given that goal, choosing the OpenGL API makes a lot of sense. Not only is it an open standard, but a wide variety of programmers are familiar with it and provide a talent pool for FoLD projects. One audience member at Dr. Thomas’s webinar asked whether the SMFoLD project would support APIs from other organizations. Dr. Thomas said that, for now, the answer was no: the final report, due out, coincidentally, the day this DD was published, would deal only with OpenGL. He did not rule out future extensions of the project that would allow other APIs.

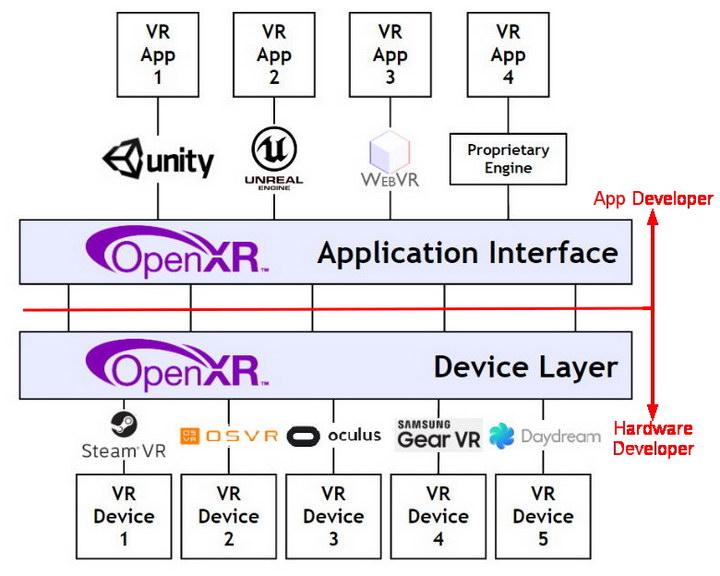

Operation of an API in a VR/AR environment. (Credit: Khronos Group with edits by M. Brennesholtz)

An API, when used in a display application, allows the content creators and the display system designers to be completely independent of each other. The military uses many sources of information, including both 2D and 3D images and data. The image sources include many different file formats. At the display end, the displays involve many different technologies, have different requirements for inputs and different drive techniques. Thus, this separation of the content creation and the display is important to the military.

The operation of a system like this is shown in the image for an AR/VR application using the OpenXR API, also from Khronos. Before OpenXR was developed, every game creator had to develop a different software package for each VR HMD it wanted its game to run on. For example, a game developer using the Unreal Engine who wanted his game to run on both the Oculus and the Samsung Gear VR HMDs would have to write his game twice, once for Oculus and once for Samsung. Owners of SteamVR, OSVR and Daydream HMDs would then be out of luck and couldn’t play the game. This is bad for everyone: game players want to play all available games, HMD makers want to offer the maximum number of games to their customers, and game developers want their software to run on all HMDs without HMD-specific rewrites.
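The cost argument can be put as simple counting. With $M$ games and $N$ headsets and no common API, each game needs its own port for each headset; with a shared API, each game targets the API once and each headset vendor supports the API once:

$$
\text{ports without a common API} = M \times N, \qquad \text{integrations with a common API} = M + N
$$

For a hypothetical catalog of 100 games and the five headset families named in the paragraph above, that is $100 \times 5 = 500$ ports versus $100 + 5 = 105$ integrations.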

When an API is used, the software and other content developers output their content as calls to the API’s application interface. For HMDs, the application interface would be OpenXR, while for SMFoLD it would be OpenGL. The display developer does not need to know the original source of the content; he just needs to know that the output he receives, and must display, is compatible with the OpenXR or OpenGL API. The hardware and software driving the display must then decode these API function calls and render the images for the display.
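The decoding side can be sketched as one class instantiated for several display types, each replaying the same call stream once per view it must produce. The class and its names are hypothetical, and the counter only shows how rendering work scales with the number of views; it says nothing about absolute cost, which depends on scene complexity.

```python
class DisplayBackend:
    """Hypothetical display-side decoder for a display-agnostic call stream."""

    def __init__(self, name: str, views: int):
        self.name = name
        self.views = views  # distinct perspective views rendered per frame

    def render_frame(self, calls: list[str]) -> int:
        """Replay every call once per view and return the number of executions."""
        executed = 0
        for view_index in range(self.views):
            # A real renderer would set a per-view camera from view_index here.
            for call in calls:
                executed += 1  # stand-in for actually executing `call`
        return executed


# The same hypothetical frame description is sent to every display type.
frame = ["clear", "draw terrain", "draw aircraft"]
displays = [DisplayBackend("2D monitor", 1),
            DisplayBackend("stereoscopic 3D", 2),
            DisplayBackend("field of light (64 views)", 64)]
for display in displays:
    print(f"{display.name}: {display.render_frame(frame)} call executions")
```

The 2D monitor replays the three calls once, the stereoscopic display twice and the 64-view FoLD 64 times (192 executions). The content side never changes; the rendering burden lands entirely on the receiver.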

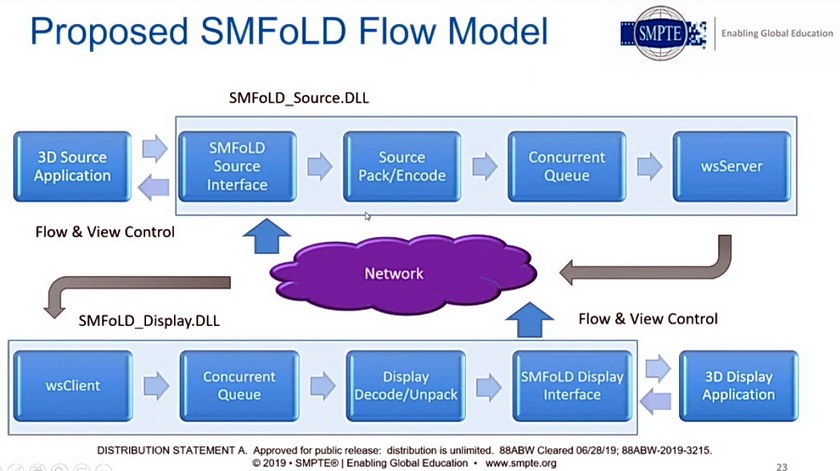

Proposed SMFoLD workflow from 3D source to FoLD or other 3D display. (Credit: Dr. Thomas of Third Dimension Technologies)

For the SMFoLD project, what is transmitted over the network is not the images but the OpenGL function calls and the object data these calls need. OpenGL works with data objects (for example, buffer objects holding geometry and texture objects holding images), and an object can be reused many times in the final video. The API function calls tell the display exactly how to render each object in a frame, including its position, size and rotation, how it is lit and how it is occluded by other objects in the scene. According to Dr. Thomas, the API calls and their supporting data can be quite compact and normally can be transmitted in real time over ordinary networks. Data for an object can be transmitted once and stored locally at the display, so it doesn’t need to be retransmitted with every frame of the video. The multiple teraflop-class computer is needed at the first and last steps of the workflow diagram. The “3D Source Application” takes the input data from whatever source and converts it to OpenGL calls. The “3D Display Application” takes the OpenGL calls and renders them for the target display.
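Reusing transmitted objects can be sketched as a sender that remembers what the display already holds. This assumes, as described in the paragraph above, that the display caches every object it receives; the class name, the content-hash IDs and the object sizes are hypothetical.

```python
import hashlib


class ObjectStreamer:
    """Hypothetical sender that transmits each object's data only once.

    Assumes the display keeps every object it has received in a local cache,
    so later frames only need a short reference plus per-frame transform data.
    """

    def __init__(self) -> None:
        self.sent: set[str] = set()

    def frame_bytes(self, objects: list[tuple[bytes, bytes]]) -> int:
        """Return bytes sent for one frame; each entry is (object_data, transform)."""
        total = 0
        for data, transform in objects:
            obj_id = hashlib.sha256(data).hexdigest()[:16]  # 16-char content ID
            if obj_id not in self.sent:
                total += len(data)  # first use: send the full object data
                self.sent.add(obj_id)
            total += len(obj_id) + len(transform)  # every frame: ID + pose
        return total


mesh = bytes(1_000_000)   # stand-in for a 1 MB terrain mesh
aircraft = bytes(200_000) # stand-in for a 200 kB aircraft model
pose = bytes(64)          # e.g. a 4x4 float32 transform matrix

streamer = ObjectStreamer()
scene = [(mesh, pose), (aircraft, pose)]
print(streamer.frame_bytes(scene))  # first frame of the scene
print(streamer.frame_bytes(scene))  # any later frame of the same scene
```

The first frame costs 1,200,160 bytes and each later frame of the same scene costs 160 bytes. The sketch also shows why a scene change hurts: every object that has not been seen before must be sent in full before it can be drawn.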

“Render” is the operative word here. The military and other users of FoLDs want real-time video. Rendering images from API calls is compute-intensive and cannot normally be done on a simple GPU; it takes a multiple teraflop-class computer at the display to render each video frame. For a FoLD, this is not a serious problem, because a field of light display typically requires a multiple teraflop-class computer anyway. For 2D displays, however (and the vast majority of the military’s displays are, in fact, 2D), a teraflop-class computer at each of the military’s millions of displays is out of the question. Even stereoscopic and multi-view 3D displays do not normally require this level of computing support. So while the OpenGL approach to sending FoLD images over the network nominally meets the goal of allowing 2D and conventional 3D displays to use the same data, it is not realistic in practice except for FoLDs and other volumetric displays.

Another problem with the rendering approach is that, even on a multiple teraflop-class computer, the time to transmit and then render each frame varies from frame to frame. At a scene change, for example, all the data for all the objects in the new scene must be transmitted to the destination, since those objects have not been used before, and then each must be rendered for the first time. Doing all this within 33 ms (one frame period at 30 frames per second) would probably require a petaflop-class computer, not an “ordinary” multiple teraflop-class computer.

Variable frame timing has a big effect on the accompanying synchronized audio. One suggestion is to transmit the audio as a separate data stream rather than embedding it in the video stream. The audio stream would carry time stamps to keep it synchronized with the video. Other approaches to audio/video synchronization are also being considered.
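One common way to use such time stamps, not necessarily the one the project will choose, is to treat the audio clock as the master and let video catch up: at each display refresh, show the newest frame whose time stamp has already been reached, skipping any that rendered too late. The function name and the millisecond time stamps are hypothetical.

```python
from bisect import bisect_right
from typing import Optional


def frame_to_show(frame_pts_ms: list[int], audio_clock_ms: int) -> Optional[int]:
    """Return the index of the newest video frame due at the audio clock time.

    Audio acts as the master clock: frames whose time stamps have passed are
    eligible (older undisplayed ones are effectively skipped), and frames still
    in the future are held back. Returns None if no frame is due yet.
    frame_pts_ms must be sorted in ascending order.
    """
    due = bisect_right(frame_pts_ms, audio_clock_ms)  # frames with pts <= clock
    return due - 1 if due > 0 else None


pts_ms = [0, 33, 67, 100, 133, 167]  # time stamps of 30 fps video frames
print(frame_to_show(pts_ms, 10))   # 0: only the first frame is due
print(frame_to_show(pts_ms, 150))  # 4: after a slow render, jump to frame 4
print(frame_to_show(pts_ms, -5))   # None: nothing is due yet
```

Because the audio is never stretched or paused to wait for video, a slow scene-change frame costs a few skipped frames rather than a lip-sync error.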

While this appears to be a promising approach, it still needs work before it becomes a practical solution for multiple display types. Of course, FoLDs themselves also need considerable work before they become practical. Hopefully the displays and the transmission scheme will be ready in time for each other. The Air Force Research Laboratory plans to present the transmission scheme to a regular standards body to be formalized. Since SMPTE not only presented Dr. Thomas’s webinar but is also the main standards body for video transmission formats, it is entirely possible that AFRL will choose to submit the system to SMPTE for standardization. – Matthew Brennesholtz