The keynotes took place on the morning of the symposium's opening day, and the first speaker was Brian Krzanich, CEO of Intel, who spoke about Intel RealSense technology.

He said he would cover three things: how interactions with devices have evolved, how Intel wants to make them more interactive than the two-dimensional world we have had up to now, and what is in Intel's labs.

He asked how devices have evolved over the years. This year marks the fiftieth anniversary of Moore’s Law, an astonishing period of development. Computing has become smaller, more personal and more connected, and those three trends have been the key drivers of this period. Computing now operates at a very personal level. Krzanich showed Curie, the button-sized computing module that he talked about at IFA, but didn’t give more detail.

Displays have evolved, and our interactions with them have moved from command lines to GUI data entry, but he still sees current interactions as minimal. Even with a 3D display, the interaction is basically 2D.

Touch displays have changed things, and once you get used to one, you don’t want to go back, but the interaction is still two-dimensional. How, he asked, can you make a computer see and hear more like a human? Humans always interact in 3D; even our hearing understands space and position. Devices should be able to see and hear, and adding that capability should start a new phase in computing.

Speech recognition is already here, with huge advances over the last two years, but in Krzanich’s view it hasn’t taken off because the audio is not reinforced with video.

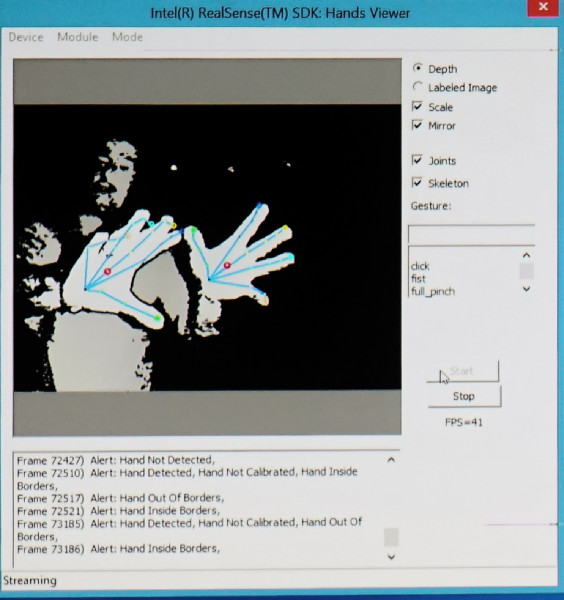

Krzanich then turned to Intel RealSense itself. The latest version is just 3.75mm thick, but the technology is still at an early stage. He demonstrated how the system can recognise gestures and facial expressions. The APIs to access the data are free and can be downloaded from www.intel.com/realsense.

More than 30 apps are already available. One he likes is a cooking app that can be controlled without touching the display, which is ideal for following cooking instructions.

A demonstration showed how a person could appear on screen and interact with content without their image taking up “a big square”, which is much more natural. For example, an image of the presenter could be overlaid on a PowerPoint presentation, allowing them to interact with the content.

Intel RealSense allows this ‘blue screen’ effect, with the person shown at bottom left

In professional applications, Intel has been working with jd.com, which runs large warehouses. The firm measures all pallets coming into its warehouse; measuring a delivery item used to take three minutes, but with RealSense it now takes around 10 seconds.

Intel is also working with a German drone company, Ascending Technologies, to mount a RealSense camera on a drone and use it to steer through forests at 12 mph. Intel is working on making that even faster. 2D sight is simply not good enough to support this kind of flying application.

Turning to 3D printing, which is a growing application, Krzanich showed that a 3D scanner can scan a person in around 30 seconds, and the data can easily be used to create 3D objects.

The 3D data can also be used to create an avatar for use in a game. That will change the gaming experience, allowing friends to replace the characters in games.

Content can be edited in 3D, and Intel showed technology from zSpace (we reported on this at CES in “HP to Sell zSpace 3D Monitor – adds 5K and Curved Models”). Multiple people with network connections can interact with virtual objects using the zSpace display, which includes RealSense technology.

Augmented reality is a hot buzzword, but up to now it has mostly been 2D. “Toys” is an Intel development project, and a demonstration showed how virtual objects can interact with real spaces, blending real and virtual objects, with the combined image seen on a tablet display.

Intel’s toy project merges real and virtual objects

Systems can also understand what the user is doing and help them.

RealSense is now being integrated into phones at the R&D stage, which will take this kind of virtual experience out of the home and office and into the wider world.

Turning to the future, Krzanich said that there is interest in projecting images into the air. Intel is working with a company to create virtual images that users can interact with.

Intel believes that the technology can be exploited by the entire industry ecosystem. The original idea was just to enhance PC interaction, but increasingly the RealSense technology is “expanding outwards”. Even smartwatches and AR glasses will need this kind of depth understanding.