The Future Media Lab from NYU Engineering profiled their live VR performance of Hamlet during this session and demonstrated it in the nearby demo area. This may be the first demonstration of this kind.

The idea is to create a virtual set for the performance of Hamlet. A user at the Exploring Future Reality workshop wears a headset and observes the performance from inside it, moving around the set and listening to the actors. The platform, called M3diate, allows up to 15 audience members to perceive each other as they explore the virtual Elsinore castle together. It also includes spatialized audio: the sound of the sea, for example, stays fixed in 3D space regardless of which direction the audience member is looking, which I confirmed firsthand.
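To make the "fixed in 3D space" idea concrete, here is a minimal sketch of the geometry behind a world-anchored sound source. It is not M3diate's implementation; the function name, the 2D simplification and the yaw convention are all assumptions chosen for illustration.

```python
import math

def relative_azimuth_deg(listener_xy, listener_yaw_deg, source_xy):
    """Angle of a world-fixed source relative to where the listener faces.

    Assumed convention: yaw 0 faces +y, positive yaw turns to the right.
    Returns degrees in (-180, 180]; positive means the source is to the right.
    """
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]
    bearing = math.degrees(math.atan2(dx, dy))  # world bearing, 0 = +y axis
    rel = (bearing - listener_yaw_deg + 180.0) % 360.0 - 180.0
    return 180.0 if rel == -180.0 else rel

# Hypothetical "sea" sound placed 10 units to the east of the listener.
sea = (10.0, 0.0)
for yaw in (0.0, 90.0, 180.0):
    print(yaw, relative_azimuth_deg((0.0, 0.0), yaw, sea))
```

The source never moves; only the angle fed to the audio renderer changes as the head turns, which is why the sea appears to stay put.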

Hamlet and his ghost are played by real actors who are connected into the virtual world from their studio in Brooklyn. Each actor wears a full-body suit outfitted with reflectors. Emitters in the studio illuminate the space, and sensors pick up the reflected light and triangulate each reflector's location in 3D space.
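As a rough illustration of triangulation, the sketch below locates one reflector from two sensors that each report a ray pointing toward it. The two-sensor setup, the sensor positions and the closest-point (midpoint) method are assumptions for illustration; the studio's actual system and algorithm were not described.

```python
def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def _along(p, d, s):
    return tuple(x + s * y for x, y in zip(p, d))

def triangulate(p1, d1, p2, d2):
    """Estimate a 3D point from two rays (origin p, direction d).

    Finds the closest points on the two rays and returns their midpoint,
    which copes with rays that do not intersect exactly because of noise.
    """
    w = _sub(p1, p2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; no unique solution")
    s = (b * e - c * d) / denom  # distance parameter along ray 1
    t = (a * e - b * d) / denom  # distance parameter along ray 2
    q1, q2 = _along(p1, d1, s), _along(p2, d2, t)
    return tuple((x + y) / 2.0 for x, y in zip(q1, q2))

# Hypothetical sensors at (0,0,0) and (4,0,0), both seeing a marker at (1,2,3).
print(triangulate((0, 0, 0), (1, 2, 3), (4, 0, 0), (-3, 2, 3)))
```

A real capture volume would use many more sensors and a least-squares fit, but the principle is the same.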

What is novel about this motion capture system is that the coordinates of each reflector are transmitted from Brooklyn to Manhattan, where they are fed into the game engine running the virtual Hamlet performance. These coordinates drive the avatars of Hamlet and his ghost in the virtual space. In theory, the participant in Manhattan can then interact with the actors, who see the Manhattan user as an avatar in their virtual world. The Manhattan user wears a Vive HMD with some sensors and a more primitive tracking system, so the Brooklyn actors at least know where the Manhattan user is in the virtual set and where they are looking. All this can be done over a low-bandwidth link with a 2 ms delay!
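A back-of-the-envelope calculation shows why sending reflector coordinates rather than video needs so little bandwidth. The presenters gave no marker counts or frame rates, so the 40 markers, 90 frames per second and 32-bit floats below are purely illustrative assumptions, as is the packet layout.

```python
import struct

def pack_frame(markers):
    """Pack a list of (x, y, z) marker positions as little-endian 32-bit floats."""
    flat = [coord for marker in markers for coord in marker]
    return struct.pack("<%df" % len(flat), *flat)

def bitrate_bps(n_markers, frames_per_second):
    """Raw payload bit rate, ignoring network headers and compression."""
    frame_bytes = struct.calcsize("<%df" % (3 * n_markers))
    return frame_bytes * 8 * frames_per_second

# Assumed figures, not from the demo: 40 markers per actor at 90 frames/s.
frame = pack_frame([(0.0, 0.0, 0.0)] * 40)
print(len(frame), "bytes per frame")
print(bitrate_bps(40, 90) / 1e6, "Mbit/s per actor")
```

Under these assumptions each actor needs well under 1 Mbit/s, a small fraction of what a video stream of the same performance would require.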

The researchers say they are now working on more advanced algorithms to turn this HMD data into a full-body avatar.

Obsess is for Shopping

Obsess is a VR shopping application that creates a virtual high-end shopping experience. Users can browse the store, look at items and place orders in the virtual world. Women view shopping as entertainment, and current web-based shopping offers the same experience whether buying a $1500 dress or a $5 toothbrush. The company wants to create luxury shopping experiences that are beautiful, interactive and immersive. It has developed tools that allow retailers, high-end brands and editors to create their own virtual showrooms.

OneBook is an idea from a company called Phylosfy which offers, as the name implies, “the only book you need to own.” The company says that many people still love the feel of a real book and the turning of pages.

In their vision, each page is encoded with a barcode that allows 2D or even 3D images to appear on the page. While the idea sounds interesting, they presented no details on how it works, which may be problematic. For example, how do you change the barcode on each page? Secondly, to turn the barcode into an image you will need to wear glasses with a camera, a display and some processing power (in other words, mixed reality glasses). Will users wear these glasses to read the old-fashioned way?

Datavized Visualizing Big Data

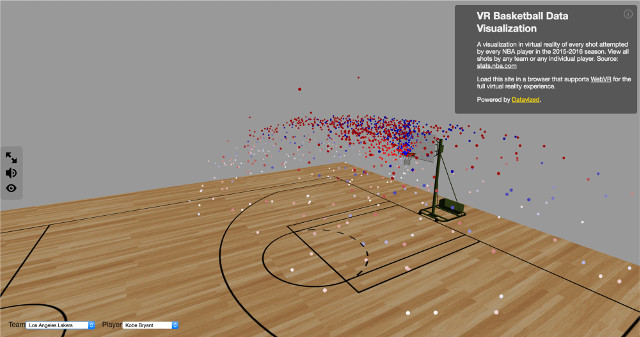

Datavized wants to “bring visualization to life” by building immersive visualization software tools for the emerging WebVR capabilities. In other words, they want professionals to be able to explore big data in a browser using VR headsets. Datavized is a “Software as a Service” platform that uses the WebVR JavaScript API developed by Mozilla to deliver 2D and 3D VR images in a browser. In October, at the W3C meeting in San Jose, Google, Mozilla, Microsoft, and Oculus announced they will support WebVR browser integration, opening the door for wider adoption.

To illustrate how this might be useful, co-founder Debra Anderson showed a point cloud representation of basketball shots that can be viewed in a 3D HMD with the ability to navigate the 3D space to understand patterns.

Geopipe Wants to Copy the Real World

Geopipe is focused on world building tools and sees opportunities in architecture, media and VFX, simulation and game design. Its goal is to create virtual copies of the real world built by algorithms. This will save time and money and allow more control for users.

This is another big data problem and Geopipe’s initial focus will be on streamlining the architectural rendering problem, where models can take 3-8 weeks to generate. Geopipe uses an array of assets to build the city blocks for the architect in much less time.

Lampix Transforms Work Surfaces

Lampix is offering a collaborative tool that can best be described as an interactive down-looking projector packed in a lamp. Their mission is to transform ordinary work surfaces into smart surfaces.

Lampix CEO George Popescu said they have already installed units at Bloomberg, where the projector’s utility as a collaborative decision-making tool is being evaluated. The system can scan and synchronize documents, supports collaboration and annotation with pen and stylus tools (even real pens), and allows finger and hand gesture interaction.

The company sees applications in offices, retail, education, healthcare, manufacturing and more. Following the evaluations at Bloomberg and other potential pilot sites, the first production prototypes are expected next spring.

VirtualApt Robots Capture 360º Video

VirtualApt develops robots to capture 360º video. The benefit of this type of capture is the ability to move about the captured video world instead of using a fixed location perspective. This allows the creation of guided tours. The company then described some of the hardware and software integrated into its robots and showed some video of the robots in action. They see applications in real estate, retail and tourism.

WebVR Helps with Advert Insertion

A second company focused on WebVR is WheresMyMedia. It is developing tools to help in the VR content creation process, with modules for advertising insertion in VR video, editing and overlay insertion, and workflow optimization.

Trio Mount is developing a new VR camera rig that is quite innovative. It is designed to solve the cost and size issues of many current 360º capture rigs. To illustrate this, the speaker noted that 14 GoPro Hero cameras and a 360 Rized rig cost $5,899 and weigh 15 pounds (6.8 kg). With the Trio Mount and three Ricoh Theta (dual fisheye) cameras, the cost is only $2,049 with a weight of just 3 pounds (1.36 kg).
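The savings implied by the speaker's figures can be worked out directly. The sketch below uses only the prices and weights quoted above; the `Rig` type and its field names are made up for illustration.

```python
from collections import namedtuple

Rig = namedtuple("Rig", ["name", "cost_usd", "weight_lb"])

LB_TO_KG = 0.45359237  # exact definition of the international pound

gopro_rig = Rig("14 GoPro cameras + rig", 5899, 15)
trio_rig = Rig("Trio Mount + 3 Ricoh Theta", 2049, 3)

def percent_saving(before, after):
    """Reduction from `before` to `after`, as a percentage of `before`."""
    return (before - after) / before * 100.0

cost_saving = gopro_rig.cost_usd - trio_rig.cost_usd
weight_saving = gopro_rig.weight_lb - trio_rig.weight_lb

print("Cost saving: $%d (%.1f%%)" % (
    cost_saving, percent_saving(gopro_rig.cost_usd, trio_rig.cost_usd)))
print("Weight saving: %d lb / %.2f kg (%.1f%%)" % (
    weight_saving, weight_saving * LB_TO_KG,
    percent_saving(gopro_rig.weight_lb, trio_rig.weight_lb)))
```

On the quoted numbers, the Trio Mount setup comes out roughly two-thirds cheaper and 80% lighter.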

The developer is a filmmaker, so he is not interested in commercializing the design himself, but he is interested in licensing it (go to www.trio.camera.com).

MediVis Brings Visualization to Medicine

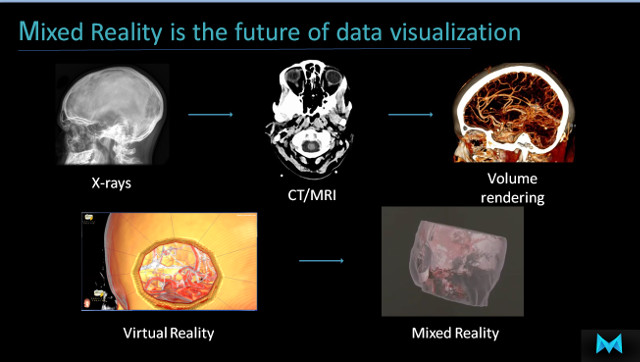

MediVis is focused on advanced visualization for the medical community. Dr. Osamah Choudhry is a neurosurgeon and he believes – and studies have shown – that 3D visualization of medical data is superior to 2D representations, but adoption has been very slow. There is huge resistance by the medical community to change from the way they were trained.

Choudhry sees mixed reality as the future of medicine and he has formed a company as a joint venture with NYU Medical Center. They want to visualize any medical data in 3D.

Their first target application is spinal surgery, and they hope to go into clinical trials in 2017 using a mixed reality platform with cloud-based rendering. He was not specific on the details of this platform, however. Orthopedics and neurosurgery could then follow.