RealD has turned to signal processing techniques to create a novel way of processing frames of video. Called a synthetic shutter, the technique combines high frame rate capture with a shutter shape that can vary from the traditional square wave, producing a continuous range of motion looks at various frame rates.

Tony Davis presented a paper in which he began by noting that frame rate conversion is difficult and introduces temporal artifacts that are often unacceptable. The best way to solve this problem, he argued, is to acquire content at a high frame rate and create derivative frame rate versions from this master, the same way resolution is handled today.

In quick panning shots, the background appears choppy. Car wheels appear to spin backwards, picket fences and brick walls hop across the screen, and helicopter blades look wrong. Lights in the scene flicker, and snow blows across the scene as unrealistic dashed lines. All of this results from judder (a consequence of how content is captured) and strobing (a consequence of how content is displayed or projected). Fixing only one of these does not solve the problem; both must be addressed together. Note that judder and strobing can occur at any frame rate.
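The backwards-spinning wheel is the textbook case of temporal aliasing. The sketch below is a minimal illustration, assuming the wheel's spoke pattern repeats at a single frequency and each frame is an instantaneous sample (no shutter blur); the function name `apparent_frequency` is hypothetical and not taken from Davis's paper.

```python
def apparent_frequency(true_hz: float, frame_rate: float) -> float:
    """Frequency a periodic motion appears to have once sampled at frame_rate.

    The true frequency is folded into the band [-frame_rate/2, frame_rate/2];
    a negative result means the motion appears to run backwards.
    """
    return true_hz - frame_rate * round(true_hz / frame_rate)


# Spoke pattern repeating 22 times per second, filmed at 24 fps.
print(apparent_frequency(22.0, 24.0))  # -2.0: the wheel seems to creep backwards
# A 10 Hz spoke pattern is below the 12 Hz Nyquist limit and is reproduced correctly.
print(apparent_frequency(10.0, 24.0))  # 10.0
```

At exactly 24 Hz the result is 0.0, the familiar "stopped wheel".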

For the most part, triple flashing in cinema projectors has solved the strobing issues, so it makes more sense to focus on the judder introduced at capture.

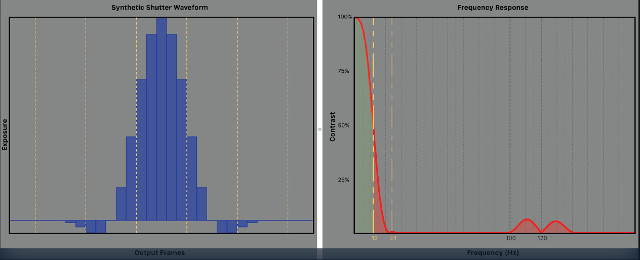

Davis laid out an excellent description of Nyquist theory and how it relates to shutter angles in motion picture capture. For example, with 24 fps capture using a 180-degree shutter angle, the Nyquist frequency is 12 Hz (half the frame rate). Motion above 12 Hz cannot be faithfully reproduced, so aliasing begins to occur. In addition, the contrast of objects moving above the Nyquist frequency begins to decrease. This is standard signal processing theory. The figure above shows the typical 180-degree, 24 fps capture system along with its MTF (the magnitude of the Fourier transform of the square-wave shutter). Note how much high-frequency information lies above the Nyquist frequency (dotted line); all of it contributes to judder.
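The numbers behind this can be checked with a few lines of code. This is a minimal sketch, assuming the open shutter is modeled as an ideal rectangular exposure window (a common simplification), so its MTF is the magnitude of a sinc function; `nyquist_hz` and `box_shutter_mtf` are hypothetical helper names.

```python
import math


def nyquist_hz(frame_rate: float) -> float:
    """Highest temporal frequency a given frame rate can represent without aliasing."""
    return frame_rate / 2.0


def box_shutter_mtf(freq_hz: float, frame_rate: float, shutter_angle_deg: float) -> float:
    """|sinc| response of a rectangular (square-wave) shutter at freq_hz.

    The exposure time is (shutter_angle / 360) of the frame period.
    """
    exposure_s = (shutter_angle_deg / 360.0) / frame_rate
    x = math.pi * freq_hz * exposure_s
    return 1.0 if x == 0 else abs(math.sin(x) / x)


print(nyquist_hz(24.0))                              # 12.0
print(round(box_shutter_mtf(12.0, 24.0, 180.0), 3))  # 0.9 at the Nyquist frequency
print(round(box_shutter_mtf(24.0, 24.0, 180.0), 3))  # 0.637 at twice the Nyquist frequency
```

Under this model the first null of the response does not arrive until 48 Hz, four times the Nyquist frequency, which is why so much energy above 12 Hz gets through.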

So what happens as you increase the shutter angle or change the sampling frequency? If you increase the shutter angle, “the ratio of aliased signal to baseband signal is improved (by reducing the aliasing), but the contrast response in the baseband signal is reduced, resulting in softer looking motion.” A wider shutter angle means a longer exposure time, so you will get more motion blur.
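The trade-off in that quote can be made concrete with the same rectangular-shutter model. The sketch compares a 6 Hz detail (below the 12 Hz Nyquist limit) with an 18 Hz detail, which folds back onto 6 Hz when sampled at 24 fps; the frequencies and the helper `box_mtf` are illustrative assumptions, not values from the paper.

```python
import math


def box_mtf(freq_hz: float, frame_rate: float, shutter_angle_deg: float) -> float:
    """|sinc| response of an ideal rectangular shutter."""
    exposure_s = (shutter_angle_deg / 360.0) / frame_rate
    x = math.pi * freq_hz * exposure_s
    return 1.0 if x == 0 else abs(math.sin(x) / x)


FPS = 24.0
BASEBAND_HZ = 6.0             # detail below the 12 Hz Nyquist limit
ALIAS_HZ = FPS - BASEBAND_HZ  # 18 Hz folds back onto 6 Hz at 24 fps

for angle in (180.0, 360.0):
    base = box_mtf(BASEBAND_HZ, FPS, angle)
    alias = box_mtf(ALIAS_HZ, FPS, angle)
    print(f"{angle:.0f} deg: baseband {base:.3f}, aliased/baseband ratio {alias / base:.3f}")
```

Going from 180 to 360 degrees, the aliased-to-baseband ratio drops from about 0.80 to 0.33, while the baseband response itself falls from about 0.97 to 0.90: less aliasing, but softer motion.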

A big problem RealD is looking at is frame rate conversion. Going from 50 Hz to 59.94 Hz, for example, is extremely difficult. Today it is done with optical flow methods, which track objects from frame to frame to reduce the judder caused by the low source sampling rate. These algorithms work, but they require a lot of supervision and manual intervention to fix errors. RealD postulates that if judder is reduced in the original signal, much simpler, and perhaps automated, frame rate conversion techniques can be used.
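To see why simple conversion between these rates judders, consider the crudest possible converter: show the most recent 50 Hz frame at each 59.94 Hz output time. This is a hypothetical baseline for illustration (`repeat_frame_mapping` is not a real tool), not the optical flow approach described above.

```python
import math
from fractions import Fraction


def repeat_frame_mapping(src_fps: Fraction, dst_fps: Fraction, n_out: int) -> list[int]:
    """Index of the most recent source frame for each output frame.

    Hypothetical naive converter: it only repeats frames and never interpolates.
    Exact fractions avoid rounding drift in the timestamps.
    """
    return [math.floor(Fraction(k) / dst_fps * src_fps) for k in range(n_out)]


SRC_FPS = Fraction(50)
DST_FPS = Fraction(60000, 1001)  # 59.94 Hz

mapping = repeat_frame_mapping(SRC_FPS, DST_FPS, 60)
repeats = [k for k in range(1, len(mapping)) if mapping[k] == mapping[k - 1]]
print(mapping[:14])
print("output frames that repeat the previous image:", repeats)
```

Roughly one output frame in six is a duplicate, so motion advances in uneven steps. Because the output-to-input rate ratio, 1200/1001, is already in lowest terms, the cadence only repeats every 1200 output frames (about 20 seconds).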

One way to reduce judder is to increase the frame rate, but very high frame rates would be needed for a meaningful reduction, says Davis. The better method is to change the square shutter shape used today. The square shape is a holdover from the days of mechanical film projection. Square-wave shutters are very poor low-pass filters, so they let an enormous amount of high-frequency information through when sampling, and that information becomes judder. With modern signal processing technologies, it is time to abandon this approach, claims Davis, and move to non-square shutter shapes.
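The "poor low-pass filter" claim can be quantified by comparing peak sidelobe levels. The sketch below contrasts a square (box) exposure with a raised-cosine exposure of the same duration; the raised cosine is an assumed example of a smooth shutter chosen for illustration, not RealD's waveform, and `peak_sidelobe` is a hypothetical helper.

```python
import numpy as np


def peak_sidelobe(window: np.ndarray, mainlobe_halfwidth: float, pad: int = 64) -> float:
    """Largest |response| beyond the main lobe, normalized so the DC response is 1.

    Frequencies are in cycles per exposure interval (x = f * T), so FFT bin k maps
    to x = k / pad after zero-padding to len(window) * pad samples.
    """
    n = len(window)
    spectrum = np.abs(np.fft.rfft(window, n * pad)) / window.sum()
    x = np.arange(len(spectrum)) / pad
    return float(spectrum[x > mainlobe_halfwidth].max())


N = 1024
box = np.ones(N)               # square shutter: fully open for the whole exposure
raised_cosine = np.hanning(N)  # assumed smooth shutter: opens and closes gradually

print(peak_sidelobe(box, 1.0))            # about 0.217 (roughly -13 dB)
print(peak_sidelobe(raised_cosine, 2.0))  # about 0.027 (roughly -31 dB)
```

The smooth shape leaks roughly eight times less energy through its largest sidelobe, at the cost of a wider main lobe; that trade between sharpness and leakage is exactly what a designed shutter waveform lets the filmmaker choose.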

The idea behind the synthetic shutter is to capture at a higher frame rate with a 360-degree shutter angle and then downsample to a lower frame rate, applying a digital filter (the synthetic shutter) that removes the content lying between the downsampled Nyquist frequency and the capture Nyquist frequency (half the capture frame rate), which would otherwise alias and show up as judder. The bigger the difference between these two frame rates, the more judder is removed.
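In code, the pipeline described above is a per-pixel filter along the time axis followed by decimation. The following is a minimal sketch assuming 120 fps input, an integer decimation factor, edge padding at the clip boundaries and output frames centered on every `factor`-th input frame; `synthetic_shutter` and its parameters are hypothetical names, and the tap values are left to the caller.

```python
import numpy as np


def synthetic_shutter(frames: np.ndarray, taps: np.ndarray, factor: int) -> np.ndarray:
    """Filter a high-frame-rate clip along time with `taps`, keeping every `factor`-th frame.

    frames: array of shape (T, H, W) or (T, H, W, C), captured with a 360-degree shutter.
    taps: weights over consecutive input frames; they should sum to 1 to preserve brightness.
    """
    taps = np.asarray(taps, dtype=np.float64)
    half = len(taps) // 2
    # Edge-pad in time so every output frame sees a full set of neighbouring frames.
    pad_width = [(half, len(taps) - 1 - half)] + [(0, 0)] * (frames.ndim - 1)
    padded = np.pad(frames.astype(np.float64), pad_width, mode="edge")
    out = []
    for start in range(0, frames.shape[0], factor):
        window = padded[start:start + len(taps)]  # centred on input frame `start`
        out.append(np.tensordot(taps, window, axes=(0, 0)))  # weighted sum over time
    return np.stack(out)


rng = np.random.default_rng(0)
hfr = rng.random((120, 4, 4))     # stand-in for one second of 120 fps footage
box_24 = np.full(5, 1 / 5)        # five equal taps: a plain 360-degree exposure at 24 fps
print(synthetic_shutter(hfr, box_24, factor=5).shape)  # (24, 4, 4)
```

Averaging five consecutive 360-degree frames at 120 fps reproduces a conventional 360-degree exposure at 24 fps; a synthetic shutter simply replaces those five equal weights with a shaped set of taps.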

The synthetic shutter corresponds to a theoretical camera acquiring at the lower frame rate. Because it is implemented mathematically, its weights can be negative and it can extend beyond the duration of a single frame. In signal processing terms, it is a simple finite impulse response (FIR) filter that can be implemented very efficiently.
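One textbook way to get such an FIR filter is a windowed sinc. The sketch below designs one for 120 fps capture with a cutoff at the 12 Hz Nyquist frequency of a 24 fps output; the Hamming-windowed sinc, the 21-tap length and the helper names are assumptions chosen for illustration, since the article does not give RealD's actual waveforms.

```python
import numpy as np


def windowed_sinc_taps(num_taps: int, cutoff_hz: float, capture_fps: float) -> np.ndarray:
    """Low-pass FIR taps (Hamming-windowed sinc), normalized to unit DC gain."""
    n = np.arange(num_taps) - (num_taps - 1) / 2
    h = np.sinc(2 * cutoff_hz / capture_fps * n) * np.hamming(num_taps)
    return h / h.sum()


def response(taps: np.ndarray, freq_hz: float, capture_fps: float) -> float:
    """Magnitude of the FIR's frequency response at freq_hz."""
    n = np.arange(len(taps))
    return float(abs(np.sum(taps * np.exp(-2j * np.pi * freq_hz / capture_fps * n))))


CAPTURE_FPS = 120.0
synthetic = windowed_sinc_taps(21, cutoff_hz=12.0, capture_fps=CAPTURE_FPS)
box = np.full(5, 1 / 5)  # plain 360-degree shutter at 24 fps, for comparison

print("any negative taps:", bool((synthetic < 0).any()))
print("span in input frames:", len(synthetic), "vs", len(box))
print("response at 30 Hz: synthetic %.4f, box %.4f"
      % (response(synthetic, 30.0, CAPTURE_FPS), response(box, 30.0, CAPTURE_FPS)))
```

The designed kernel has negative lobes and spans 21 input frames (about four 24 fps frame periods), and at 30 Hz it passes only a small fraction of what the box lets through (the box passes 0.2). A longer kernel would sharpen the transition around 12 Hz, at the cost of reaching further into neighbouring frames.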

According to Davis's analysis, achieving "through the window" realism in motion capture would require a camera running at 1200 fps and a projection system running at 240 fps. That is impractical today.

As a result, Davis presented a tool that lets content creators design different waveforms for the synthetic shutter to achieve the artistic effect they are looking for. He recommends acquiring content at a 360-degree shutter angle and 120 fps or higher. In post production, the synthetic shutter can then be applied to create looks ranging from choppy to soft and cinematic. Derivative versions at 24, 25 or 29.97 fps can also be created easily, with minimal judder.
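Of those targets, only 24 fps divides 120 fps evenly, so 25 and 29.97 fps need output frames that fall between captured frames. One way to handle this, sketched below under stated assumptions, is to treat the shutter as a continuous function of time and sample it at each input frame's offset from the output frame's centre. The raised-cosine shape, its width of two output frame periods, and the names `resample_with_shutter` and `raised_cosine_shutter` are all hypothetical choices for illustration, not part of Davis's tool.

```python
import numpy as np


def resample_with_shutter(frames, capture_fps, output_fps, shutter):
    """Render frames at output_fps from a clip captured at capture_fps.

    shutter(dt) gives the weight of an input frame whose timestamp lies dt seconds
    from the output frame's centre; weights are normalized per output frame.
    """
    n_in = frames.shape[0]
    t_in = np.arange(n_in) / capture_fps
    n_out = int(np.floor(n_in / capture_fps * output_fps))
    out = []
    for k in range(n_out):
        w = shutter(t_in - k / output_fps)  # weights for every input frame
        w = w / w.sum()                     # keep overall brightness unchanged
        out.append(np.tensordot(w, frames, axes=(0, 0)))
    return np.stack(out)


def raised_cosine_shutter(width_s):
    """Hypothetical smooth shutter: raised-cosine weights over width_s seconds, centred on 0."""
    def weights(dt):
        inside = np.abs(dt) < width_s / 2
        return np.where(inside, 0.5 * (1 + np.cos(2 * np.pi * dt / width_s)), 0.0)
    return weights


rng = np.random.default_rng(1)
hfr = rng.random((120, 2, 2))  # one second of stand-in 120 fps footage
for fps in (24.0, 25.0, 30000 / 1001):
    out = resample_with_shutter(hfr, 120.0, fps, raised_cosine_shutter(2.0 / fps))
    print(round(fps, 3), out.shape)
```

This loop weights every input frame for every output frame, which is simple but wasteful; a production version would only touch the few frames inside the shutter's support.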

The figure below shows one synthetic waveform and the resulting MTF. Note the huge reduction in information above the Nyquist frequency, which means there will be little judder in this image. Davis also noted that the synthetic shutter is already in use in the industry, specifically on the new Ang Lee film, which has been shown in 4K at 120 fps but will need many derivative versions at lower frame rates that deliver a similar aesthetic. – CC