New at this year’s SID Display Week was special coverage of vehicle displays. Long a niche market for LCD fabs, the space is still dominated by top automotive component integrators like Continental, Rockwell Collins, and Harman International, who can ruggedize the displays and support them over the long product life cycles needed to meet carmakers’ warranty and parts-supply requirements. But volumes have grown over the years, along with other technology being added to vehicles to improve safety and efficiency.

SID hosted a special automotive display track during the Display Week symposium. It included auto display applications and systems (Session 41) and next-generation auto display technologies with a focus on new HUD systems (Session 47). Touch interactivity and the human-machine interface were covered in Session 53, while Session 59, on flexible curved coatings, included a paper on rollable displays using oxide TFTs (59.2).

Invited paper 41.3 by Paul Russo, “Megatrends Driving Auto Displays,” is a great place to start. Russo covered the history of auto displays starting with the lone speedometer (Continental in Germany was one of the first suppliers), used in vehicles that didn’t even have a gas gauge. To Russo, LCDs and HUDs have very distinct roles in the vehicle, with the former serving mostly as a distraction from primary driving activities and the latter used to augment the driver’s primary tasks with critical vehicle information and safety alerts. Data types, including active (collision avoidance) vs. passive (rear-view camera feed), real-time (safety) vs. non-real-time, and even video streams, were all discussed in his paper. Russo’s 10-year outlook for auto displays includes continued expansion of displays to accommodate autonomous vehicles and connected cars that interface both with each other and with the Internet of Things (IoT).

On the HUD side, Russo identifies a big issue: accommodating the unique windscreen geometries of a vast number of car models, which makes standardized components (and price reduction) a challenge. Geometric processing can help reduce cost here and, combined with standard windscreen sizes, could open the door to mass HUD deployment in vehicles going forward.
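
To illustrate what geometric processing can mean in practice, here is a minimal Python sketch that pre-warps HUD image coordinates so a windscreen’s distortion cancels out. It assumes a hypothetical single-coefficient radial distortion model (`k1`) and made-up per-model coefficients; real windscreens need richer, measured models, and nothing here comes from Russo’s paper. The idea it shows is that one standardized projector could serve different windscreens by swapping calibration data in software.

```python
"""Hypothetical sketch of HUD geometric pre-warping.

Assumption: each windscreen distorts normalized image coordinates
radially as (x, y) -> (x, y) * (1 + k1 * r^2). The k1 values below are
illustrative only, not measured data.
"""

# Hypothetical per-windscreen calibration coefficients.
WINDSCREEN_K1 = {"sedan": 0.05, "suv": 0.12}


def windscreen_distort(x: float, y: float, k1: float) -> tuple[float, float]:
    """Assumed forward model: where the windscreen moves a projected point."""
    s = 1.0 + k1 * (x * x + y * y)
    return x * s, y * s


def prewarp(x: float, y: float, k1: float, iterations: int = 20) -> tuple[float, float]:
    """Find the point to project so the windscreen maps it onto (x, y).

    Inverts the radial model by fixed-point iteration, which converges
    for the small k1 values and normalized coordinates (|r| <= 1) used here.
    """
    u, v = x, y
    for _ in range(iterations):
        s = 1.0 + k1 * (u * u + v * v)
        u, v = x / s, y / s
    return u, v


if __name__ == "__main__":
    target = (0.5, 0.25)  # where the driver should see the symbol
    for model, k1 in WINDSCREEN_K1.items():
        u, v = prewarp(*target, k1)
        seen = windscreen_distort(u, v, k1)
        print(f"{model}: project at ({u:.4f}, {v:.4f}) -> seen at ({seen[0]:.4f}, {seen[1]:.4f})")
```

Only the calibration table changes between car models; the warp code and the projector hardware stay the same.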

Possibly one of the most novel display technologies targeting automotive comes from Sun Innovations, with its full-windshield color HUD. It is based on an emissive projection display (EPD) technology and creates a full-windshield heads-up display with “unlimited viewing angles.” Sun said it can be applied to any glass window (not just in cars) and can also convert any surface into a high-quality emissive display without hiding or affecting the surface’s appearance. In his session paper (47.4), Sun describes photo-quality images on a fully transparent RGB emissive screen, produced by selectively exciting the screen with images in multiple UV-visible wavebands from a projector. He said information can be displayed anywhere on the windscreen (or any other window in the car), and when you add sensor integration to the augmented reality mix, the possibilities seem unlimited.

Sun’s technology uses projected excitation of luminescent materials (made as optically clear sheets under 50 microns thick). He stacks these color-sensitive (RGB) films and excites them using a display engine powered by a UV light source. He calls the process “wavelength selective excitation” (WSE), with each layer having distinctive absorption and emission characteristics. In principle, the projector encodes the original color image into three excitation wavebands, each of which excites the corresponding film layer to generate the red, green, or blue component. The trick is to do this without interfering with the excitation or emission of the other two layers. Best yet, Sun said these films can be produced in a low-cost roll-to-roll process and are haze-free (there are no pixel structures to interfere with light transmission). If this sounds like the holy grail of auto displays, we agree.
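
The encoding step can be pictured as a small linear-algebra problem. The Python sketch below assumes, purely for illustration, that each film layer’s emission is a linear function of the three excitation-band intensities, described by a hypothetical 3x3 crosstalk matrix. Solving that system per pixel gives the band intensities that produce the wanted red, green, and blue output while compensating for residual crosstalk between layers. The matrix values are invented, and this is not presented as Sun Innovations’ actual method.

```python
"""Hypothetical sketch of wavelength-selective excitation (WSE) encoding.

Assumed linear model: emission = M @ excitation, where M[i][j] is the
emission of film layer i (R, G, B) per unit excitation in waveband j.
Off-diagonal terms model imperfect selectivity. Values are invented.
"""

Matrix3 = list[list[float]]

CROSSTALK: Matrix3 = [
    [0.90, 0.05, 0.00],  # red layer response to bands 1..3
    [0.03, 0.85, 0.04],  # green layer
    [0.00, 0.02, 0.80],  # blue layer
]


def det3(m: Matrix3) -> float:
    """Determinant of a 3x3 matrix (cofactor expansion along row 0)."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def encode_pixel(rgb: tuple[float, float, float], m: Matrix3 = CROSSTALK) -> list[float]:
    """Solve m @ e = rgb for the excitation intensities e (Cramer's rule).

    Negative drive levels are physically impossible, so they are clipped
    to zero; such colors can then only be approximated.
    """
    d = det3(m)
    excitation = []
    for j in range(3):
        # Replace column j of m with the target emission vector.
        mj = [[rgb[i] if c == j else m[i][c] for c in range(3)] for i in range(3)]
        excitation.append(max(0.0, det3(mj) / d))
    return excitation


def emitted(excitation: list[float], m: Matrix3 = CROSSTALK) -> list[float]:
    """Forward model: the RGB emission produced by given band intensities."""
    return [sum(m[i][j] * excitation[j] for j in range(3)) for i in range(3)]


if __name__ == "__main__":
    target = (0.5, 0.4, 0.3)
    bands = encode_pixel(target)
    print("band drive:", [round(b, 4) for b in bands])
    print("emission:  ", [round(x, 4) for x in emitted(bands)])
```

The clipping step shows why selectivity matters: the more crosstalk between layers, the more saturated colors fall outside what non-negative excitation can reproduce.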

Details of HUD implementation were discussed in Session 47.3 by Qualcomm’s Mainak Biswas. He makes the case for augmented reality HUDs (AR HUDs), which display information without requiring the driver to take his or her eyes off the road. But the technology goes much further than that. Biswas distinguishes between “screen-fixed” and “world-fixed” HUDs: the former serve as a primary source of passive data such as vehicle speed and fuel level, while world-fixed HUDs perceptually appear to be “aligned with the real environment.” They can show exactly where to turn, for example, or highlight other real-world objects of interest, such as the brake lights on the car just ahead.

Figure: World-fixed AR HUD system detecting the vehicle ahead and other critical data (a) and overlaying contextual information (brake lights) on that vehicle to alert the driver in a bright, sunlit environment.

This added display information provides what some characterize as cognitive off-loading, allowing the driver to gain valuable information from the display without spending thinking cycles translating it. One example is overlaying a right-turn arrow directly on the oncoming street, aligned with the exact position of the street as seen through the windscreen. This real-time information requires far less cognition than translating a turn indicated on a 2D GPS map shown on the center-stack LCD.

Biswas’ paper discusses paths to getting there, starting with an accurate model of the picture-generation process so that 3D content is visualized with correct perspective from the user’s viewpoint. This requires a camera calibration algorithm that accounts for both view-independent spatial geometry and the view-dependent perspective transformation. Other computer vision techniques compensate for distortion introduced by both the optics and the combiner in the light path. A front-facing camera and head tracking help determine the driver’s point of view, and that is just the technology inside the car.
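
The view-dependent part of this pipeline comes down to ray geometry. The Python sketch below assumes a HUD whose virtual image is a flat plane fixed in a vehicle frame with x right, y up, and z forward (in metres). Given the tracked eye position, it finds where the eye-to-object ray crosses that plane and converts the crossing point into HUD pixels. All plane dimensions and resolutions are hypothetical, and the sketch leaves out the optics and combiner distortion correction described in the paper.

```python
"""Hypothetical sketch: placing a world-fixed AR HUD symbol.

Assumed vehicle frame: x right, y up, z forward, metres. The HUD's
virtual image is modeled as a flat plane fixed in that frame.
"""
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class HudPlane:
    z: float = 2.5           # virtual image plane position along z (assumed)
    center_x: float = 0.0    # plane center in the vehicle frame (assumed)
    center_y: float = 1.1
    px_per_m: float = 800.0  # HUD pixels per metre on the plane (assumed)
    width_px: int = 800
    height_px: int = 300


def world_to_hud(point: Vec3, eye: Vec3, plane: HudPlane = HudPlane()) -> Optional[Tuple[float, float]]:
    """Pixel where `point` must be drawn so it overlays the real object as
    seen from `eye`; None if the object is behind the eye or off the HUD."""
    dx, dy, dz = (p - e for p, e in zip(point, eye))
    if dz <= 0 or plane.z <= eye[2]:
        return None
    t = (plane.z - eye[2]) / dz  # where the eye-to-object ray meets the plane
    hit_x = eye[0] + t * dx
    hit_y = eye[1] + t * dy
    u = (hit_x - plane.center_x) * plane.px_per_m + plane.width_px / 2
    v = plane.height_px / 2 - (hit_y - plane.center_y) * plane.px_per_m  # image v grows downward
    if 0 <= u < plane.width_px and 0 <= v < plane.height_px:
        return u, v
    return None


if __name__ == "__main__":
    brake_light = (0.3, 0.9, 20.0)  # hypothetical detection 20 m ahead
    print(world_to_hud(brake_light, eye=(0.0, 1.2, 0.0)))   # approx. (430.0, 100.0)
    print(world_to_hud(brake_light, eye=(0.05, 1.2, 0.0)))  # approx. (465.0, 100.0)
```

The second call shows why head tracking matters: a 5 cm head movement shifts the symbol by about 35 pixels on this hypothetical HUD, so a screen-fixed rendering would drift off the brake light.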

Outside the vehicle, a second module detects lane markings on the road; these are mapped into the camera coordinate system and blended with other input from the front-facing camera, and the system then synthesizes a perspective-correct image for display. AR tracking and depth perception are also discussed, and both feed into the design of an AR HUD system that includes lane-detection algorithms and smart notifications for collision avoidance. Bottom line: look for improved advanced driver assistance systems (ADAS) that boost safety and cut down on extraneous information that distracts the driver at the expense of safety. This session provided one of the most comprehensive discussions of AR HUD technology and is a very good read for those so inclined.
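
Getting lane markings into a shared coordinate system typically relies on inverse perspective mapping: if the road is assumed flat, every image pixel below the horizon corresponds to exactly one road point. The Python sketch below assumes an ideal pinhole front camera mounted at a known height with zero pitch and roll; the intrinsics and mounting height are hypothetical values, and lane detection itself (finding the marking pixels) is out of scope. It back-projects marking pixels onto the road and fits a straight lane line by least squares. This is one simple approach, not the algorithm from Biswas’ paper.

```python
"""Hypothetical sketch: lane-marking pixels -> road coordinates.

Assumptions: ideal pinhole front camera (no lens distortion), zero pitch
and roll, known camera height above a flat road. Camera frame: x right,
y down, z forward. Road points are returned as (lateral_m, forward_m).
"""
from typing import Optional, Sequence, Tuple

FX, FY = 1000.0, 1000.0  # focal lengths in pixels (assumed)
CX, CY = 640.0, 360.0    # principal point in pixels (assumed)
CAM_HEIGHT_M = 1.4       # camera height above the road (assumed)


def pixel_to_road(u: float, v: float) -> Optional[Tuple[float, float]]:
    """Intersect the pixel's viewing ray with the road plane y = CAM_HEIGHT_M."""
    ray_y = (v - CY) / FY
    if ray_y <= 0:
        return None  # at or above the horizon: the ray never hits the road
    forward = CAM_HEIGHT_M / ray_y      # depth at which the ray reaches the road
    lateral = forward * (u - CX) / FX   # sideways offset at that depth
    return lateral, forward


def fit_lane(points: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Least-squares line lateral = a + b * forward through road points."""
    n = len(points)
    mean_z = sum(z for _, z in points) / n
    mean_x = sum(x for x, _ in points) / n
    szz = sum((z - mean_z) ** 2 for _, z in points)
    if szz == 0:
        raise ValueError("need points at two or more distances")
    b = sum((z - mean_z) * (x - mean_x) for x, z in points) / szz
    return mean_x - b * mean_z, b


if __name__ == "__main__":
    # Hypothetical marking pixels from a lane line 1.8 m to the right.
    detections = [(820.0, 500.0), (730.0, 430.0), (685.0, 395.0)]
    road = [p for p in (pixel_to_road(u, v) for u, v in detections) if p is not None]
    print(fit_lane(road))  # offset ~1.8 m, slope ~0
```

The fitted line lives in road coordinates, so it can then be re-projected from the driver’s eye point to draw a perspective-correct lane overlay.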

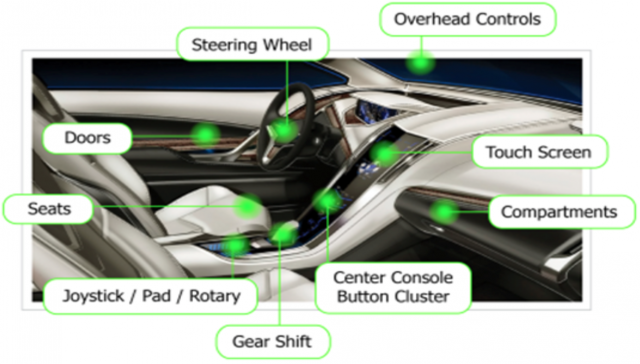

On the interface side of vehicle displays, paper 53.3 from Chad Sampanes, Iva Segalman, and Neil Olien looks at haptic feedback design that can help communicate vital information to the driver safely through touch. The talk opened with an interesting look at user satisfaction with existing display systems, quoting a 2013 Consumer Reports survey that found 56% of complaints about touch screens were due to unresponsiveness. This sets up the talk’s major focus: the usability of haptic feedback. For starters, in-vehicle touch screens with a compelling haptic solution offer ongoing communication with the driver during several types of interactions. According to Sampanes, the most immersive (engaging) user experiences “…are ones in which there is a synergy between what one sees, hears, smells, and feels.” He is quick to point out that this takes place within a limited field of view, because the driver stays focused on the primary task of, well, driving. Examples include touch screens that give each press a physical feel and dials that click at various levels of intensity, both of which go beyond the single dimension of touch.

Figure: Haptic feedback is not just for displays anymore; multiple surfaces in a car can benefit the driver with augmented information that supports the primary task of driving.

Haptics are also malleable in design direction: they offer design alternatives that can help create and support, for example, expectations based on the type of car one drives. Here’s how Sampanes puts it: “A touch experience can be creatively directed to evoke the experience of brand or moment from light-hearted whimsy to sophisticated austerity” (think VW Bug vs. BMW M-series race car). Managing the frequency, strength, latency, and pattern of the haptic experience can vastly affect the user experience (and emotion). Finally, he makes the point that haptic feedback can extend beyond display design to other surfaces, like the steering wheel and other non-display components in the car, and will ultimately be used to support a vehicle’s brand identity. So perhaps if you make a wrong turn in the future, the Mercedes steering wheel will haul off and slap you (Dummkopf!).
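
The four levers named in the talk (frequency, strength, latency, and pattern) map naturally onto the parameters of an actuator drive signal. The Python sketch below renders a sampled sine-burst waveform from those parameters. The two “brand” profiles, the 8 kHz sample rate, and the normalized 0 to 1 strength scale are illustrative assumptions, not values from the paper or from any haptics vendor’s API.

```python
"""Hypothetical sketch: rendering a haptic drive waveform from the four
parameters named in the talk (frequency, strength, latency, pattern).
Profiles, sample rate and the 0..1 strength scale are assumptions."""
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class HapticEffect:
    frequency_hz: float                          # carrier frequency of the vibration
    strength: float                              # 0..1 fraction of max actuator drive
    latency_ms: float                            # silent delay after the touch event
    pattern_ms: Tuple[Tuple[float, float], ...]  # (on, off) bursts in milliseconds

    def __post_init__(self) -> None:
        if not 0.0 <= self.strength <= 1.0:
            raise ValueError("strength must be between 0 and 1")


def render(effect: HapticEffect, sample_rate_hz: int = 8000) -> List[float]:
    """Return drive samples in [-strength, strength]."""
    def n(ms: float) -> int:
        return round(ms * sample_rate_hz / 1000)  # milliseconds -> sample count

    samples = [0.0] * n(effect.latency_ms)
    step = 2 * math.pi * effect.frequency_hz / sample_rate_hz
    for on_ms, off_ms in effect.pattern_ms:
        samples.extend(effect.strength * math.sin(step * i) for i in range(n(on_ms)))
        samples.extend([0.0] * n(off_ms))
    return samples


# Hypothetical brand profiles echoing the "whimsy vs. austerity" contrast.
PLAYFUL = HapticEffect(frequency_hz=250, strength=0.6, latency_ms=5, pattern_ms=((15, 30), (15, 0)))
AUSTERE = HapticEffect(frequency_hz=150, strength=0.9, latency_ms=5, pattern_ms=((25, 0),))

if __name__ == "__main__":
    for name, effect in (("playful", PLAYFUL), ("austere", AUSTERE)):
        wave = render(effect)
        print(name, len(wave), "samples, peak", round(max(abs(s) for s in wave), 3))
```

A double light tap versus a single firm click is one way the same actuator could express two different brand personalities.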

Also, on the SID Display Week show floor, LCD fabs including Sharp and JDI, plus system integrators like Rockwell Collins, prominently featured displays specifically targeting the automotive space. See our related coverage for more on this.

In short, the automotive display space is fast becoming a high-growth area, moving beyond its niche and into a major market for display fabs and component resellers alike. From the looks of all this activity, SID Display Week is responding. – Steve Sechrist