The Experience Hall was for start-ups and research projects (like the SID i-Zone), and we found a number of display-related projects at different stages of development.

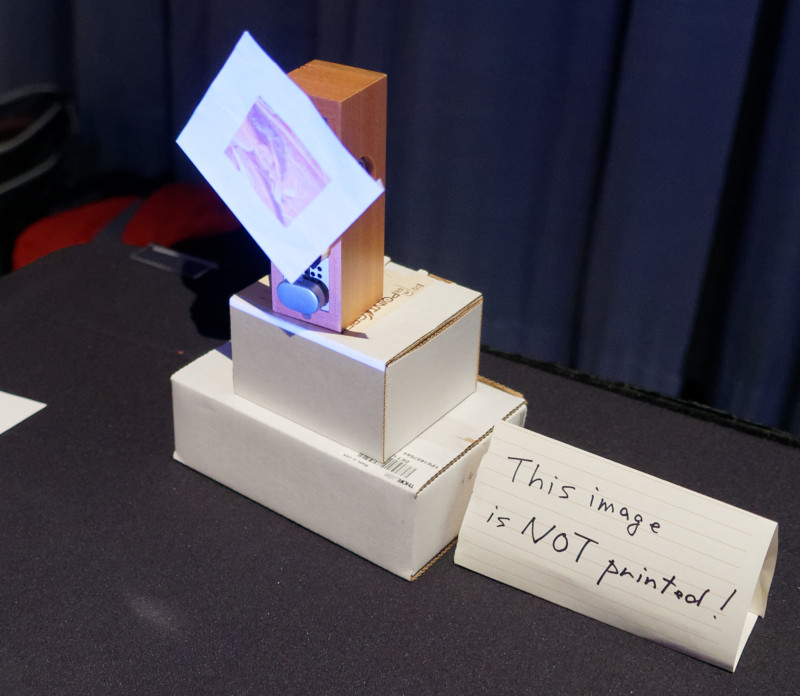

FairLight is a Japanese research project that applies the kind of virtual projection technology developed by Asukanet to images in water. The display might be used in museums or public places. The developer showed us how the image could appear to float in your hand, in the water.

This demo was of projection into water. Image:Meko

Next, we looked at Verifocal, a technology from Lemnis Technologies of Singapore that uses gaze and depth detection to adjust the optics in a VR headset and overcome the classic ‘accommodation/vergence conflict’ problem. The system tracks the user’s gaze continuously and is expected to be used in both AR and VR applications. It is compatible with SteamVR. As well as dealing with the conflict, the technology can offer ‘myopia tuning’, so that headsets can be optimised for users who normally need glasses and they can use the headset without them.

The company is working to a licensing model and wants to sell to headset makers rather than build its own headset. At the moment, it is selling evaluation kits to ‘qualified buyers’ and wouldn’t tell us anything about pricing or other details.
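
To make the accommodation/vergence idea concrete, here is a minimal Python sketch (our own illustration, not Lemnis’s algorithm). It assumes symmetric, straight-ahead fixation, so the fixation distance follows from the interpupillary distance (IPD) and the angle between the two gaze rays; the focus demand in dioptres is then 1/distance. The `prescription_d` offset used for ‘myopia tuning’ and all function names are hypothetical.

```python
import math

def fixation_distance_m(ipd_m: float, vergence_deg: float) -> float:
    """Distance to the fixation point, assuming symmetric straight-ahead vergence."""
    if vergence_deg <= 0:
        return math.inf  # parallel gaze rays: the user is looking at optical infinity
    half_angle = math.radians(vergence_deg) / 2  # each eye turns inward by half the angle
    return (ipd_m / 2) / math.tan(half_angle)

def focus_demand_dioptres(distance_m: float, prescription_d: float = 0.0) -> float:
    """Dioptric distance at which to place the headset's virtual image.

    1/d is the accommodation demand for a target at d metres. Subtracting a
    (hypothetical) spherical prescription shifts the image so that a myope
    (negative prescription) gets it nearer, inside their focusing range.
    """
    vergence_d = 0.0 if math.isinf(distance_m) else 1.0 / distance_m
    return vergence_d - prescription_d
```

For example, with a 63 mm IPD, gaze rays converging at about 7.2° correspond to a target roughly 0.5 m away, a demand of 2 D. For a hypothetical −2 D myope, an object at infinity also gets a 2 D demand, which places the image at their 0.5 m far point.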

Lemnis is trying to solve the convergence/accommodation issue. Image:Meko

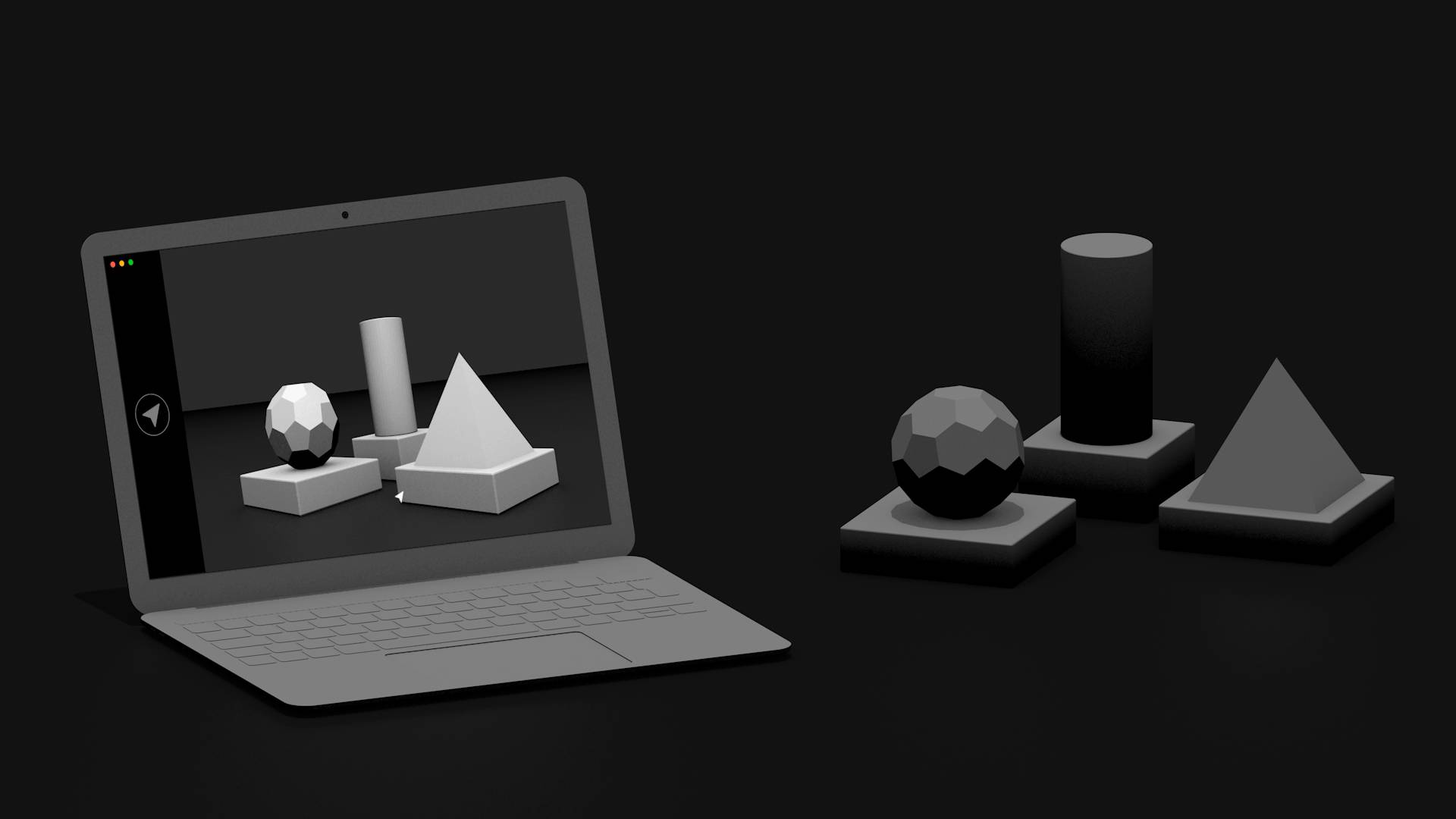

Lightform Inc had a more commercial offering than most of the demos in the Experience Hall: the Lightform LF1, a system that uses structured light for projection mapping. The LF1 is a complete replay system, so once it has been used with a PC to create the mapping (which looks very simple to do), it can then replay the content.

The system is designed to be very easy to use and to programme, and can be purchased as a control device for $699, or fitted to an Epson 1060 projector for $1,499.

The company’s software can be controlled through the IFTTT IoT service.
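
Lightform did not say exactly which patterns the LF1 projects, but a common structured-light technique is to project a sequence of binary Gray-code stripe patterns and record, for each camera pixel, which patterns lit it. The minimal Python sketch below assumes that approach; the function names are hypothetical.

```python
import math

def gray_code_patterns(width: int) -> list[list[int]]:
    """One vertical-stripe pattern per bit: patterns[b][x] is 1 if column x is lit."""
    n_bits = max(1, math.ceil(math.log2(width)))
    patterns = []
    for b in reversed(range(n_bits)):  # most significant bit first
        # x ^ (x >> 1) is the binary-reflected Gray code of column x
        patterns.append([((x ^ (x >> 1)) >> b) & 1 for x in range(width)])
    return patterns

def decode_column(bits: list[int]) -> int:
    """Turn the on/off sequence seen by one camera pixel back into a projector column."""
    gray = 0
    for bit in bits:  # MSB first, matching gray_code_patterns
        gray = (gray << 1) | bit
    # Convert Gray code back to plain binary: XOR of all right shifts
    value = gray
    shift = gray >> 1
    while shift:
        value ^= shift
        shift >>= 1
    return value
```

Decoding every camera pixel this way gives a camera-to-projector column map; a second set of horizontal stripes gives the rows. Gray codes are often preferred over plain binary because neighbouring columns differ in only one bit, so a misread at a stripe edge is off by at most one column.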

Lightform has an interesting mapping device. Image:Meko

Check this brief video on the mapping device. Image:Meko

Nagaoka University had a team at the event showing a light field display that uses a spinning ball covered with small ‘fly eye’ bulges. The image is projected by three projectors, synchronised to the rotation of the ball using time-division multiplexing. The image produced was very low resolution, but the group believes the approach is scalable. The team thinks the display could be used for art exhibitions and signage.
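
The team did not give the ball’s rotation speed or the number of views, but the basic bookkeeping of time-division multiplexing can be sketched in Python, assuming the 360° rotation is split into equal angular slots, each showing a different view, and that a projector refreshes every slot once per revolution. All names and numbers here are hypothetical.

```python
import math

def view_index(angle_rad: float, n_views: int) -> int:
    """Index of the angular slot (and therefore the view) the ball is currently in."""
    slot_width = 2 * math.pi / n_views
    idx = int((angle_rad % (2 * math.pi)) // slot_width)
    return min(idx, n_views - 1)  # guard against float rounding just below 2*pi

def required_frame_rate(rev_per_s: float, n_views: int) -> float:
    """Frames per second a projector needs to show every view once per revolution."""
    return rev_per_s * n_views
```

At a hypothetical 10 revolutions per second with 36 views, a projector would need 360 frames per second, so the number of views scales directly with the projector speed required.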

This spinning ball with reflective bumps is a lightfield display. Image:Meko

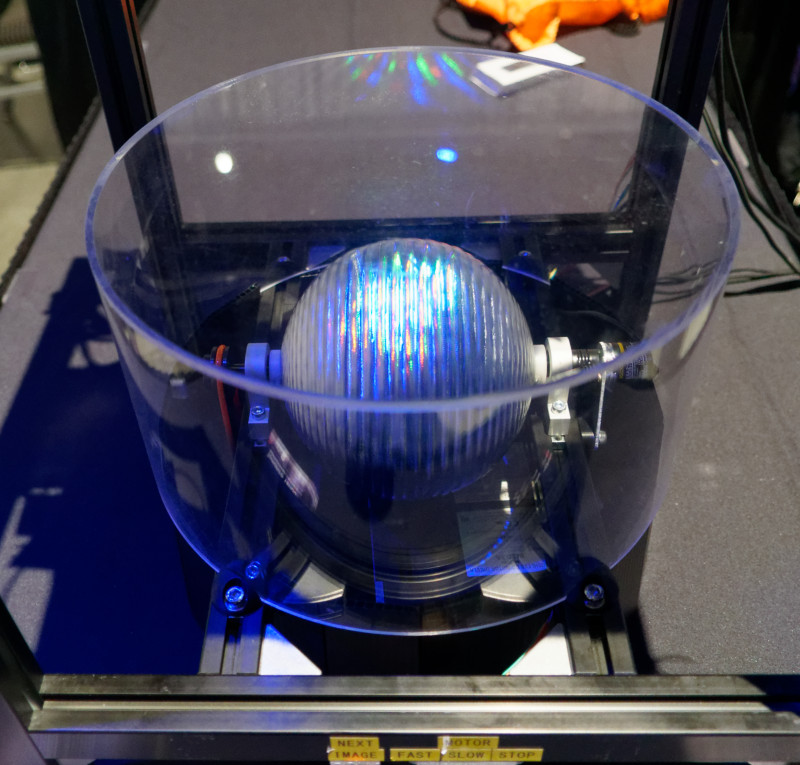

Canadian scientists from the University of Saskatchewan and the University of British Columbia have developed a system called CoGlobe that uses a spherical ‘fish bowl’ rear projection system for mixed reality displays in museums and other locations. Unlike similar systems that we have seen (e.g. at AWE Expo), the technology is based on multiple standard picoprojectors rather than a single larger projector with a custom fisheye lens.

CoGlobe uses three projectors to map inside a sphere. Image:Meko

A group from Sony’s Computer Science Laboratories showed HeadLight, a wearable projector that creates an image (currently pre-rendered) that is displayed in space in front of the viewer as a kind of AR. It uses a fisheye lens to produce a wide-angle image, and processing is used to correct the distortion. The processing also maps the image onto surfaces in the environment, both for projection mapping and to keep the view consistent from the user’s perspective. The group anticipates that the imagery could be made dynamic in future iterations. There is a good video of the technology online.
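
HeadLight’s exact lens model was not described, but the distortion correction can be illustrated with a minimal Python sketch, assuming an ideal equidistant fisheye (image radius r = f·θ) and a target that should look like an ordinary pinhole, or rectilinear, view (r = f·tan θ). For each projector pixel, the function finds the point in the undistorted source image whose content that pixel should carry. The focal lengths and function name are hypothetical.

```python
import math

def fisheye_to_rectilinear(x: float, y: float, f_fish: float, f_rect: float):
    """Map a projector pixel (x, y), relative to the optical centre, to the point
    in a rectilinear source image whose content it should show.

    Assumes an equidistant fisheye model, r = f * theta. Returns None for rays
    at or beyond 90 degrees, which a flat image cannot represent.
    """
    r_fish = math.hypot(x, y)
    if r_fish == 0:
        return (0.0, 0.0)  # the optical centre maps to itself
    theta = r_fish / f_fish  # angle of this ray from the optical axis
    if theta >= math.pi / 2:
        return None
    r_rect = f_rect * math.tan(theta)  # where a pinhole image puts the same ray
    scale = r_rect / r_fish  # the remap is purely radial, so scale x and y equally
    return (x * scale, y * scale)
```

In practice this would be evaluated once per pixel to build a lookup table, with each frame then warped by a remap function such as OpenCV’s `cv2.remap`; the environment-mapping step described above would add a further, scene-dependent warp on top.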

Sony had this projection AR research project. Image:Meko

An intriguing development was a gaze-dependent system that combines gaze tracking and depth mapping to work out where the viewer is looking, and then adjusts programmable lenses to create eyeglasses that compensate for vision problems such as presbyopia, the age-related loss of near focusing ability that affects many older people (including your reporter!). The group uses commercial programmable lenses and is focusing (if you’ll pardon the pun) on the computational part of the puzzle, as the project has been driven by the Computational Imaging lab at Stanford University. The group showed that it is the combination of the two technologies, gaze tracking and depth mapping, that delivers good performance.
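
The group’s algorithm was not described to us, but the way gaze and depth combine can be sketched in Python under simple assumptions: the depth camera and eye tracker share pixel coordinates, the wearer’s remaining accommodation range is known, and the lens only needs to supply whatever near focus the eye can no longer manage. All names and default values are hypothetical.

```python
import statistics

def lens_power_dioptres(depth_map: list[list[float]], gaze_px: tuple[int, int],
                        accommodation_d: float, max_power_d: float = 3.0,
                        window: int = 2) -> float:
    """Optical power to set on a focus-tunable lens for the current gaze.

    depth_map: 2D list of depths in metres (hypothetical depth-camera output).
    gaze_px: (row, col) where the eye tracker says the wearer is looking.
    accommodation_d: focusing range the wearer still has, in dioptres.
    """
    r0, c0 = gaze_px
    rows, cols = len(depth_map), len(depth_map[0])
    # Median depth in a small window around the gaze point, to reduce noise
    samples = [depth_map[r][c]
               for r in range(max(0, r0 - window), min(rows, r0 + window + 1))
               for c in range(max(0, c0 - window), min(cols, c0 + window + 1))
               if depth_map[r][c] > 0]  # skip invalid (zero) depth readings
    if not samples:
        return 0.0  # no usable depth: leave the lens at zero power
    demand = 1.0 / statistics.median(samples)  # accommodation demand in dioptres
    # Supply only the shortfall, clamped to the lens's range
    return min(max_power_d, max(0.0, demand - accommodation_d))
```

In this sketch the gaze point selects which depth matters and the depth map supplies the distance; neither input alone is enough, which is consistent with the group’s point about combining the two.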

The system is not quite ready for consumers, yet! Image:Meko

A group from Tohoku University was showing a single-chip DLP projector running at 2400 fps that could warp the image at that speed. The system was being used to map an image very accurately onto rapidly moving objects, including a fast-spinning screen.
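
A little arithmetic shows why frame rate matters for mapping onto moving objects. The spinning screen’s speed was not given, so the Python sketch below uses a hypothetical 1,000 rpm and compares 2400 fps with a conventional 60 fps projector.

```python
def frame_period_ms(fps: float) -> float:
    """Time between successive projected frames, in milliseconds."""
    return 1000.0 / fps

def degrees_per_frame(rpm: float, fps: float) -> float:
    """How far a spinning target turns while one frame is on screen."""
    deg_per_s = rpm * 6.0  # 1 rpm = 360 degrees per 60 seconds
    return deg_per_s / fps

for fps in (60, 2400):
    print(f"{fps} fps: {frame_period_ms(fps):.3f} ms per frame, "
          f"{degrees_per_frame(1000, fps):.1f} degrees of rotation per frame")
```

At 1,000 rpm the target turns 100° between frames at 60 fps, but only 2.5° at 2400 fps (a frame period of about 0.42 ms), which is what makes accurate per-frame warping onto a spinning screen feasible.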

The projector was mapping onto this rotating target. Image:Meko
